The AI Tour Guide

About the project

A gadget that automatically gives you a guided tour wherever you are with a simple command or a press of a button.

Project info

Difficulty: Easy

Platforms: DFRobot, Blues Wireless

Estimated time: 2 hours

License: GNU General Public License, version 3 or later (GPL3+)

Items used in this project

Hardware components

Story

A once-in-a-lifetime vacation was rapidly approaching. My wife and I tend to wander pretty fast from place to place, which is fantastic for seeing as much as possible, but means we don't glean a whole lot in terms of interesting landmark information. Sure, there are signs here and there and you can overhear a tour guide's spiel every now and again, but what if you could just have a tour guide with you at all times? With a tour guide in my pocket, I could ask for all the info I could want at any time and we could meander at our own pace. So I made one.

The code builds on some code I've been using to control my smart home. We'll still want to be able to use voice commands and text to speech, check whether we have wifi and fall back to Blues Wireless if not, and so on. We use a UNIHIKER to run the program itself.

In short, the program generates a tour based on your exact coordinates. If you don't get accurate coordinates, your tour is going to be of some other location, which would be incredibly useless. We use wifi if we have it and Blues Wireless when we don't. Realistically, most tours will occur when we don't have wifi, which means getting Blues up and running will be critical to our app. So, let's get that working!
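Here is a minimal sketch of that wifi-or-Blues decision. It assumes a simple reachability probe is good enough to stand in for "do we have wifi". The names `have_internet` and `choose_transport`, and the probe target `8.8.8.8:53`, are illustrative choices and not taken from the project code.

```python
import socket


def have_internet(host="8.8.8.8", port=53, timeout=2.0):
    """Return True if a TCP connection to a public host succeeds.

    This tests general reachability, which is used here as a stand-in
    for a working wifi connection (an assumption of this sketch).
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def choose_transport(wifi_available):
    """Pick how API calls leave the device: direct HTTPS or via the Notecard."""
    return "wifi" if wifi_available else "notecard"
```

Calling `choose_transport(have_internet())` right before each tour request means the device can switch paths as you walk in and out of coverage.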

The Blues wireless setup

I've grown quite comfortable with the Notecarrier-F, which is great because we'll still be using it. However, we're mixing up the notecard itself to accommodate international service. This switch is easy. There's a small screw on the Notecarrier that holds the notecard in place. Unscrew it, plug in the new notecard, reattach the connections, and you're good to go.

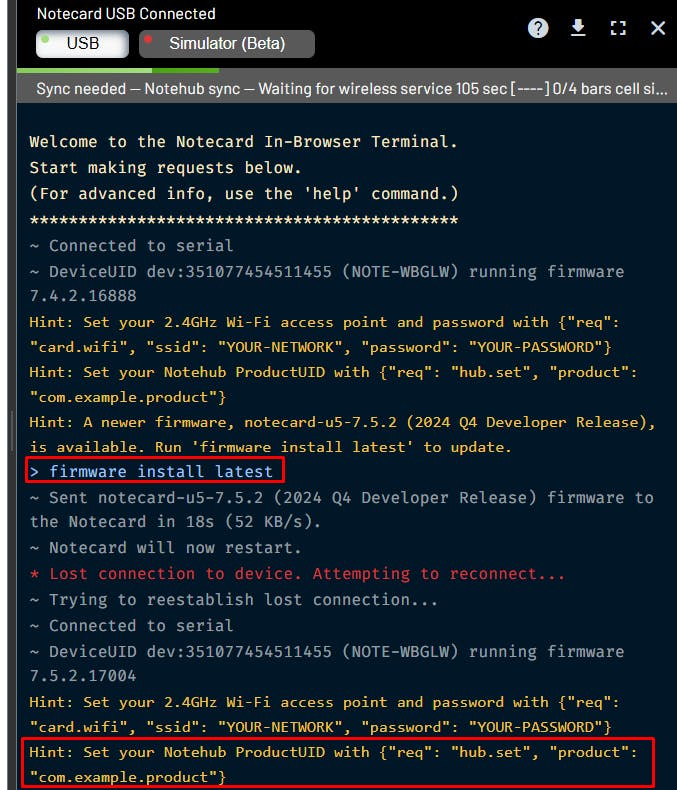

It's a new Notecard, so we just need to jump over to the quickstart guide and get it up and running. As the hints in the terminal convey, you'll need to update the firmware, add wifi access, and associate the Notecard with your ProductUID. Create your project and associate it accordingly.

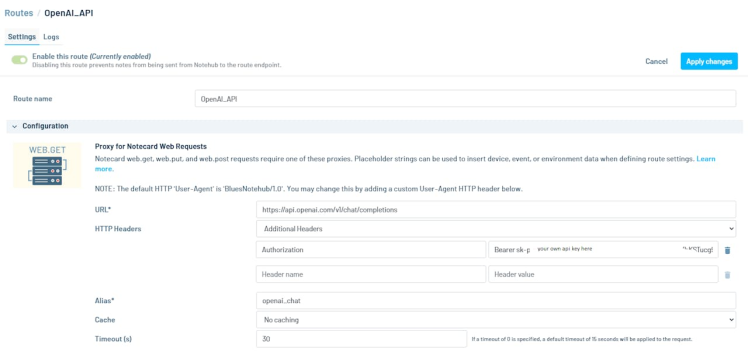

What we are looking to do is set up a route that allows us to make an API call to generate an AI tour. To do this, just click Routes --> Create Route --> Proxy for Notecard Web Requests. We will be using OpenAI to generate our AI tours. Setting up a call like this is easier than it seems. It should look like the setup below, where you put your own API key in the authorization field.

The url is https://api.openai.com/v1/chat/completions

For HTTP headers, as shown, we need to choose additional headers and add an Authorization header. For its value, write "Bearer", then a space, then paste in your API key from OpenAI.

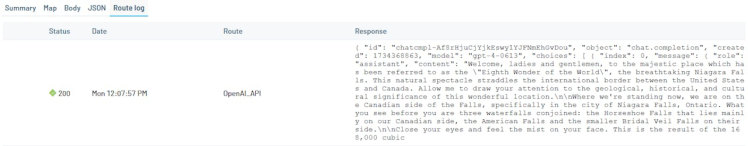
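Once the route exists, the device side of the call could look roughly like the sketch below. It builds a Notecard `web.post` request that names the route and carries the chat completions JSON as its body, then pulls the tour text out of the reply. The route alias `"openai"`, the model name, and both function names are placeholders I made up for illustration; use whatever alias you typed when creating the route.

```python
def build_web_post(route_alias, messages, model="gpt-4o-mini"):
    """Build a Notecard web.post request for the proxy route.

    route_alias and model are placeholders (assumptions of this sketch).
    The API key is not included here because the route adds the
    Authorization header on the Notehub side.
    """
    return {
        "req": "web.post",
        "route": route_alias,
        "body": {"model": model, "messages": messages},
    }


def extract_tour(rsp):
    """Return the generated text from a web.post response, or None on failure."""
    if rsp.get("result") != 200:
        return None
    choices = rsp.get("body", {}).get("choices") or []
    if not choices:
        return None
    return choices[0].get("message", {}).get("content")


# Sending it with the notecard library might look like this
# (the serial port path is an assumption):
#   import notecard, serial
#   card = notecard.OpenSerial(serial.Serial("/dev/ttyACM0", 9600))
#   rsp = card.Transaction(build_web_post("openai", messages))
#   tour_text = extract_tour(rsp)
```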

You'll be able to see the calls going through on the Blues side as well. Below is a successful generation of a tour of Niagara Falls (you'll see in the full ai tour guide code that I have an option to use a dummy location - mostly so that I'm not sharing where I live with the world :) ).

As far as wifi setup goes for other cases, you can browse to http://10.1.2.3/pc/network-setting on the device connected to your UNIHIKER and connect it to the wifi from there.

Making the Tour Actually Enjoyable

An AI tour implies text to speech, and the built-in one I was using before was pretty rough on the ears. Since we're using the OpenAI API anyway, I used their text to speech API as well. It sounds night and day better. Passing a massive audio file is best done over wifi, though, so I ran quite a lot of tests to determine a good option to use when we aren't on wifi. Tacotron took absolutely forever, Festival sounded decent and ran fast but had some errors, and Pico also sounded decent and ran quickly. So, we use Pico when we aren't on wifi instead of what we were using previously. It obviously isn't as good as OpenAI's paid text to speech API, but it is way, way easier on the ears than the previous one.

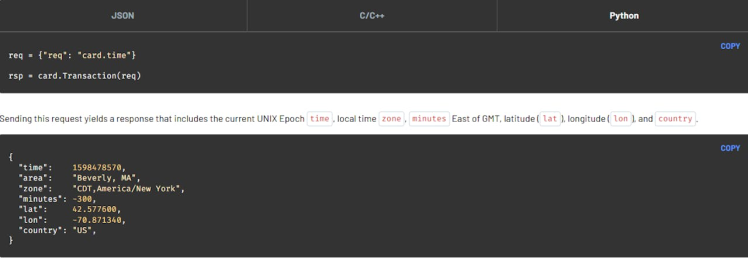
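The offline path can be sketched as two command-line calls driven from Python: `pico2wave` writes the speech to a WAV file and `aplay` plays it. This is a rough outline under my own assumptions (the temp file path, the `en-US` voice, and the function names are illustrative), not the exact code from the project.

```python
import subprocess


def pico_commands(text, wav_path="/tmp/tour.wav", lang="en-US"):
    """Return the (synthesize, play) command lists for offline speech."""
    synth = ["pico2wave", "-l", lang, "-w", wav_path, text]
    play = ["aplay", wav_path]
    return synth, play


def speak_offline(text):
    """Synthesize text with Pico and play it; raises if either tool fails."""
    synth, play = pico_commands(text)
    subprocess.run(synth, check=True)
    subprocess.run(play, check=True)
```

Passing the commands as lists avoids shell quoting problems when the tour text contains quotes or apostrophes.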

That brings us back to making sure the tour is actually good and relevant, which means getting good GPS coordinates. When we're on wifi we use the Google Geolocation API, because we'd get incredibly unhelpful results if we just used the coordinates associated with the wifi connection. When we don't have wifi, we'll be on Blues Wireless, and in those cases we can use calls Blues offers to get coordinates. The call I used, as you'll see in the code, was "card.time" because it is super straightforward and the response is concise and includes the user's coordinates.
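To make the two coordinate sources concrete, here is a small sketch of pulling latitude and longitude out of each response. It relies on `card.time` replies carrying `lat` and `lon` fields and on Google Geolocation replies carrying a `location` object with `lat` and `lng`. The function names are mine, and the sample values in the usage note are made up.

```python
def coords_from_card_time(rsp):
    """Extract (lat, lon) from a Notecard card.time response, or None."""
    lat, lon = rsp.get("lat"), rsp.get("lon")
    if lat is None or lon is None:
        # The Notecard may not have a location yet (for example, before
        # its first cellular connection).
        return None
    return float(lat), float(lon)


def coords_from_geolocation(rsp):
    """Extract (lat, lon) from a Google Geolocation API response, or None."""
    loc = rsp.get("location") or {}
    if "lat" not in loc or "lng" not in loc:
        return None
    return float(loc["lat"]), float(loc["lng"])
```

For example, `coords_from_card_time({"lat": 43.08, "lon": -79.07, "time": 1700000000})` gives `(43.08, -79.07)`. A `None` result is a signal to retry rather than request a tour of the wrong place.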

How it works is we say “Butler, give me a tour” (or one of a few similar phrases), where Butler is the trigger word for processing commands. We tell OpenAI that it's a tour guide and request a tour in a format that would work well with text to speech. We receive a thorough tour as text and read it out with the text to speech setup I described earlier.
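A rough sketch of that flow, assuming the speech recognizer hands us a plain transcript string. The list of accepted phrases and the exact prompt wording are invented for illustration; the real project code may phrase both differently.

```python
TRIGGER = "butler"
# Hypothetical set of phrases that count as a tour request.
TOUR_PHRASES = ("give me a tour", "start a tour", "tell me about this place")


def is_tour_command(transcript):
    """True if the trigger word is followed by one of the tour phrases."""
    text = transcript.lower()
    if TRIGGER not in text:
        return False
    after_trigger = text.split(TRIGGER, 1)[1]
    return any(phrase in after_trigger for phrase in TOUR_PHRASES)


def build_tour_messages(lat, lon):
    """Build chat messages asking for a speech-friendly tour of a location."""
    return [
        {
            "role": "system",
            "content": (
                "You are a friendly tour guide. Answer in plain spoken "
                "sentences with no lists, headings, or symbols, so the "
                "text reads well aloud."
            ),
        },
        {
            "role": "user",
            "content": (
                f"Give me a tour of the area around latitude {lat:.5f}, "
                f"longitude {lon:.5f}."
            ),
        },
    ]
```

Asking for plain sentences in the system message matters more than it looks, since text to speech tends to read bullet markers and symbols out loud.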

As convenient as it would be to bring my USB speaker so the whole family could hear the tour at once, headphones felt a lot more reasonable. I don't want to be the guy blaring audio no one else asked for. There's no audio port on the UNIHIKER, but I had already split the USB port for the speaker tests, so I was able to just find and use a USB headset. We need the USB splitter because the Notecarrier-F is plugged in via USB as well.

While actually out and about using this thing I did come up with a much-needed improvement. In retrospect, it should have been obvious but here we are. Instead of exclusively using voice commands, it makes a whole lot more sense to be able to just press a button. It can be a bit awkward to blurt out “Butler, give me a tour”! So, as you'll see in the code, we listen for a button press and start the tour if it is pressed.
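The button listening can be sketched as a small polling loop that fires on the moment the button goes from released to pressed. To keep the sketch hardware-independent, it takes any zero-argument function that reports the button state. The function name, poll interval, and timeout are my own choices, and the pinpong wiring in the comment is an assumption rather than a copy of the project code.

```python
import time


def wait_for_press(read_button, poll_s=0.05, timeout_s=None):
    """Block until read_button() changes from False to True.

    Returns True on a press, or False if timeout_s elapses first.
    A button that is already held down when the call starts is ignored
    until it is released and pressed again.
    """
    start = time.monotonic()
    previous = read_button()
    while True:
        current = read_button()
        if current and not previous:
            return True
        previous = current
        if timeout_s is not None and time.monotonic() - start >= timeout_s:
            return False
        time.sleep(poll_s)


# On the UNIHIKER this might be wired up roughly as follows (an assumption
# about the pinpong UNIHIKER extension):
#   from pinpong.board import Board
#   from pinpong.extension.unihiker import button_a
#   Board().begin()
#   if wait_for_press(button_a.is_pressed):
#       start_tour()  # hypothetical function
```

Triggering on the press edge rather than the held state keeps one long press from starting several tours back to back.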

As for the code, it should work right away once you put in your API keys and Blues ProductUID, but you'll need to make sure the relevant libraries are installed.

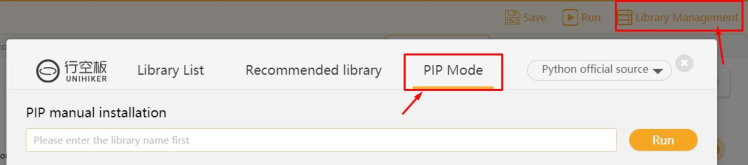

The ones that can be installed via pip are easier, as there is a built in place for installation in Mind+, which is the custom IDE from DFRobot that directly supports the Unihiker. Those installs are below:

pip install speechrecognition

pip install pyttsx3

pip install simpleaudio

pip install pydub

pip install note-python

pip install requests

pip install pinpong

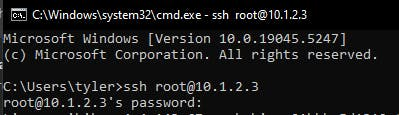
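A quick way to confirm the installs took is to check that each library can be found before launching the tour guide. This is a small sketch using only the standard library. Note that the import name can differ from the pip name (for example, the speech recognition package imports as `speech_recognition`). The module list below is my reading of the installs above, and the function name is illustrative.

```python
import importlib.util

# Import names for the libraries installed above (assumed mapping).
REQUIRED_MODULES = [
    "speech_recognition",
    "pyttsx3",
    "simpleaudio",
    "pydub",
    "notecard",
    "requests",
    "pinpong",
]


def missing_modules(names=REQUIRED_MODULES):
    """Return the module names from the list that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]
```

Printing `missing_modules()` on the UNIHIKER gives an empty list when everything is in place, or the names that still need installing.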

In particular, to get pico text to speech working (for when we don't have wifi), you'll need to install this via ssh. To do so, just go to a terminal and type ssh root@10.1.2.3 while your UNIHIKER is connected to the computer. The password is dfrobot.

Then just run this and you should be good to go:

sudo apt update

sudo apt install -y python3 python3-pip python3-serial

sudo apt install -y alsa-utils

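sudo apt install -y espeak-ng libttspico-utils

The pico2wave command is provided by the libttspico-utils package, and aplay comes with alsa-utils.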

Conclusion

Even after I got home I couldn't stop myself from further iterating on the code to make it nice, so it should be in a good spot to give you an on-the-go AI tour wherever your travels may take you! I added all the features I thought would be nice, but I'm always curious what spin-offs and enhancements others come up with. Please share if you iterate on this project! Regardless, I hope you enjoyed it. Have a good one.
