myCobot with Jetson Nano Educational Solution

About the project

Jetson Nano is integrated with myCobot, the world's smallest 6DOF cobot, as an educational AI kit.

Project info

Difficulty: Difficult

Platforms: ROS, NVIDIA, OpenCV, Elephant Robotics

Estimated time: 1 hour

License: GNU General Public License, version 3 or later (GPL3+)


Story

Elephant Robotics' collaborative robot with computer vision: the myCobot with Jetson Nano educational solution

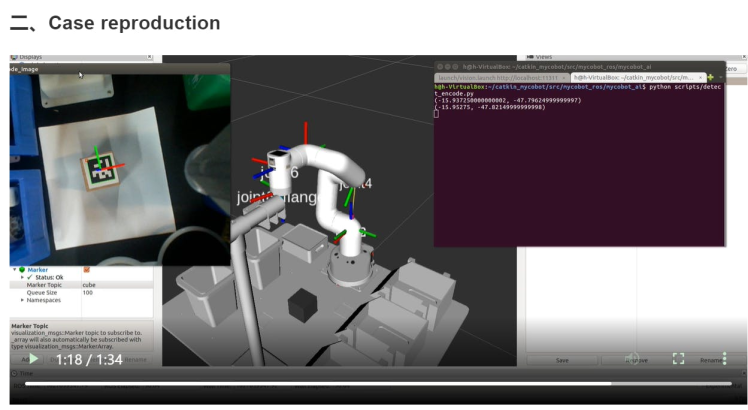

Jetson Nano myCobot DEMO with an AI kit

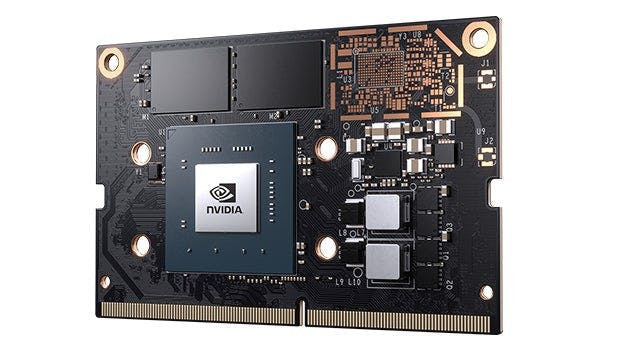

Jetson Nano is a small, powerful computer for embedded applications and AI IoT that delivers the power of modern AI in a $99 (1KU+) module.

Get started fast with the comprehensive JetPack SDK with accelerated libraries for deep learning, computer vision, graphics, multimedia, and more. Jetson Nano has the performance and capabilities you need to run modern AI workloads, giving you a fast and easy way to add advanced AI to your next product.


Jetson Nano

In this article, you will see how the Jetson Nano board from NVIDIA is integrated with myCobot, the world's smallest 6DOF collaborative robot from Elephant Robotics. Based on a trial with the Artificial Intelligence Kit, we have designed a well-rounded solution for every robotics learner around this Jetson Nano-myCobot. The details of the project follow:

Ideas and challenges:

- Development and applications oriented to visual recognition

- Control development and applications based on the ROS platform

- How to turn these products into outstanding commercial products

- Full image processing and recognition technology based on OpenCV

- Automatic path planning technology based on ROS and MoveIt

- Brand value empowerment

- myCobot-Jetson Nano – the world's smallest Jetson Nano 6DOF robot arm

- Artificial Intelligence Kit – image recognition, ROS

- Peripheral accessories

NVIDIA Products:

- Jetson Nano – 2G

Processing Engines

- vision processing module

- object machine learning engine

- OpenCV

- ROS

- myCobot series

We are integrating Jetson Nano into myCobot to build on the success of the Raspberry Pi and M5Stack versions. Collaborative robots pair naturally with computer vision applications, and we consider Jetson Nano a very good development board for this purpose.

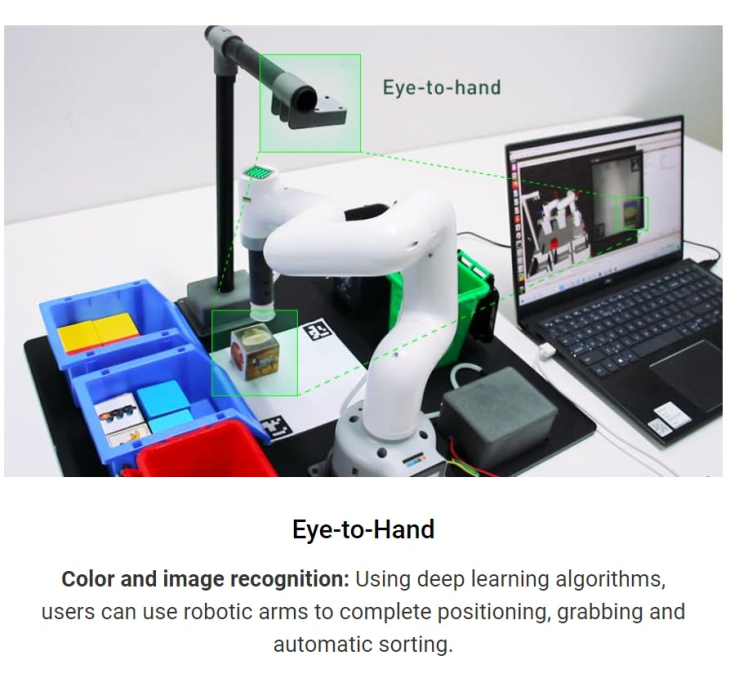

Procedure & Coding

This demo mainly showcases colour recognition with the Jetson Nano myCobot.

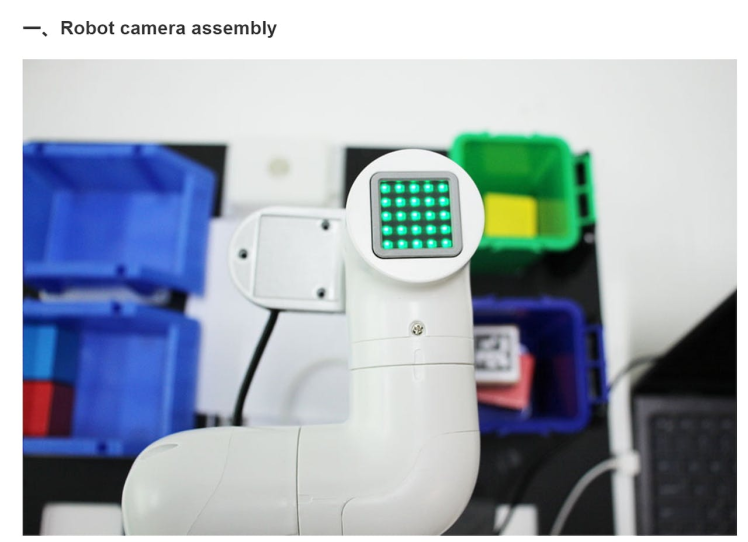

First, adjust the camera and set up positioning. Here we focus on the core part:

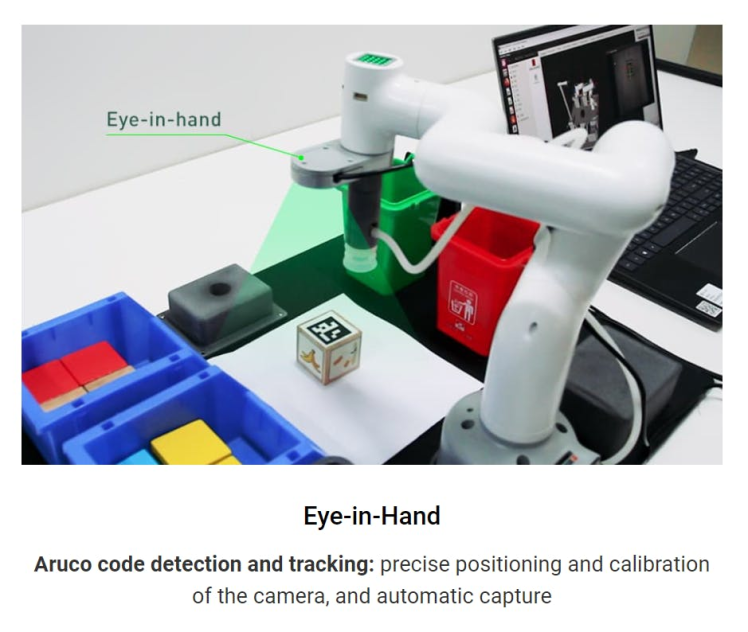

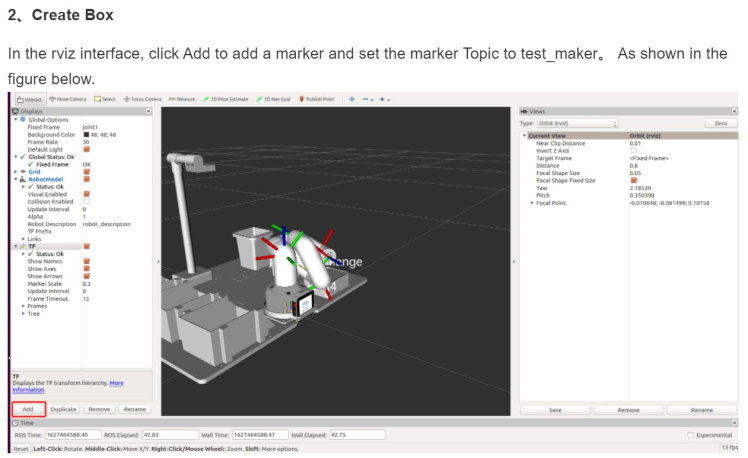

Overview

- This case uses OpenCV for vision and ROS communication to control the manipulator. First, calibrate the camera to ensure its accuracy. By identifying two ArUco codes within the capture range, the recognition range is located automatically, and the correspondence between the centre point of the actual recognition range and its pixel position in the video is determined.

- Use the colour recognition functions provided by OpenCV to identify the object block and determine its pixel position in the video. From the block's pixel position and the pixel position of the centre of the actual recognition range, calculate the block's coordinates relative to that centre. Then, using the known position of the recognition range's centre relative to the manipulator, calculate the block's coordinates relative to the manipulator. Finally, a series of actions grabs the object block and places it in the corresponding bucket.

We skip the ArUco identification and video clipping modules here and move on to the colour recognition module.

• The received picture is converted from BGR to the HSV colour space, and the colour recognition range is set according to the HSV values initialized in the user-defined class.

• The resulting mask is eroded and then dilated to remove small noise and rough edges. Each colour block is located by filtering its contours, and finally, after some necessary data filtering, the colour blocks are framed in the picture.
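To illustrate what the erode-then-dilate step does to a mask, here is a minimal pure-Python sketch of binary erosion, dilation and opening on a small 0/1 grid. It is not OpenCV's implementation: the helper names `erode`, `dilate` and `opening` are hypothetical, and they only mirror `cv2.erode`/`cv2.dilate` with a square k×k kernel by taking the minimum (erosion) or maximum (dilation) over in-bounds neighbours.

```python
def _morph(mask, k, op):
    """Apply op (min or max) over a k x k window around every pixel.

    Neighbours outside the grid are skipped, so the border neither erodes
    nor grows the mask.
    """
    r = k // 2
    h, w = len(mask), len(mask[0])
    return [
        [
            op(
                mask[y][x]
                for y in range(max(0, i - r), min(h, i + r + 1))
                for x in range(max(0, j - r), min(w, j + r + 1))
            )
            for j in range(w)
        ]
        for i in range(h)
    ]


def erode(mask, k=3):
    # A pixel stays 1 only if every pixel in its window is 1
    return _morph(mask, k, min)


def dilate(mask, k=3):
    # A pixel becomes 1 if any pixel in its window is 1
    return _morph(mask, k, max)


def opening(mask, k=3, iterations=1):
    # Erode first (removes specks smaller than the kernel), then dilate
    # (restores the surviving blocks to roughly their original size)
    for _ in range(iterations):
        mask = erode(mask, k)
    for _ in range(iterations):
        mask = dilate(mask, k)
    return mask


# A 3x3 "block" plus a single-pixel speck of noise at (5, 5)
mask = [[0] * 7 for _ in range(7)]
for i in range(1, 4):
    for j in range(1, 4):
        mask[i][j] = 1
mask[5][5] = 1

cleaned = opening(mask, k=3)
print(cleaned[5][5], cleaned[2][2])  # 0 1
# With a 1x1 kernel every window is the pixel itself, so nothing changes
print(opening(mask, k=1) == mask)  # True
```

The speck disappears while the block survives. The last line also shows that a 1×1 kernel, as used in the project code, leaves the mask unchanged; a larger kernel is needed for erosion and dilation to have any effect.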

```python
import cv2
import numpy as np


def color_detect(self, img):
    """Find the largest block matching one of the HSV ranges in self.HSV.

    Returns the block centre (x, y) in pixels, or None if nothing is found.
    Sets self.color to 1 for "yellow" and 0 for "red".
    """
    x = y = 0
    # Convert the BGR frame to HSV once; hue-based thresholds are easier in HSV
    hsv = cv2.cvtColor(img, cv2.COLOR_BGR2HSV)
    # Structuring element for erosion/dilation. Note: a 1x1 kernel leaves the
    # mask unchanged; a larger kernel such as (3, 3) actually removes speckles.
    kernel = np.ones((1, 1), np.uint8)
    # Shorter side of the frame, used to reject implausibly sized boxes
    min_side = min(img.shape[0], img.shape[1])
    for mycolor, item in self.HSV.items():
        lower = np.array(item[0])
        upper = np.array(item[1])
        # Binary mask: 255 where the pixel lies inside the HSV range
        mask = cv2.inRange(hsv, lower, upper)
        # Erode to remove edge roughness, then dilate to restore block size
        erosion = cv2.erode(mask, kernel, iterations=2)
        dilation = cv2.dilate(erosion, kernel, iterations=2)
        # Outer contours only; [-2] works with both OpenCV 3 and 4 return values
        contours = cv2.findContours(
            dilation, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)[-2]
        # Deal with misidentification: keep contours whose box has a plausible size
        candidates = [
            c for c in contours
            if min_side / 10 < min(cv2.boundingRect(c)[2:]) < min_side
        ]
        if candidates:
            # Take the largest remaining contour as the object block
            c = max(candidates, key=cv2.contourArea)
            # (x, y) is the top-left corner, (w, h) the box size
            x, y, w, h = cv2.boundingRect(c)
            # Frame the block in the picture
            cv2.rectangle(img, (x, y), (x + w, y + h), (153, 153, 0), 2)
            # Block centre in pixels
            x, y = (x * 2 + w) / 2, (y * 2 + h) / 2
            # Record which colour the block is
            if mycolor == "yellow":
                self.color = 1
            elif mycolor == "red":
                self.color = 0
    # A centre of (0, 0) means nothing was detected
    if abs(x) + abs(y) > 0:
        return x, y
    return None
```
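The article does not show how `self.HSV` is initialized. Judging from the code, it maps a colour name to a `[lower, upper]` pair of HSV bounds. The sketch below assumes that layout with hypothetical bound values, and uses Python's standard `colorsys` module to classify a single BGR pixel with the same inclusive per-channel test that `cv2.inRange` applies, on OpenCV's 8-bit HSV scale (H from 0 to 179, S and V from 0 to 255). The helper names are hypothetical.

```python
import colorsys

# Hypothetical HSV ranges (OpenCV 8-bit scale); tune them for your lighting
HSV = {
    "yellow": [[20, 43, 46], [34, 255, 255]],
    "red": [[0, 43, 46], [8, 255, 255]],
}


def bgr_to_opencv_hsv(b, g, r):
    """Convert one 8-bit BGR pixel to OpenCV-style HSV (H 0-179, S/V 0-255)."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    # colorsys returns h in [0, 1); OpenCV stores hue as degrees / 2
    return round(h * 180) % 180, round(s * 255), round(v * 255)


def classify_pixel(bgr, ranges=HSV):
    """Return the first colour whose [lower, upper] bounds contain the pixel."""
    hsv = bgr_to_opencv_hsv(*bgr)
    for name, (lower, upper) in ranges.items():
        # Inclusive per-channel range check, as cv2.inRange does
        if all(lo <= c <= hi for c, lo, hi in zip(hsv, lower, upper)):
            return name
    return None


print(classify_pixel((0, 0, 255)))    # red
print(classify_pixel((0, 255, 255)))  # yellow
print(classify_pixel((255, 0, 0)))    # None (blue)
```

Red hue also wraps around near H = 179, so a real setup may need a second red range to catch those pixels.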

Next comes the grabbing module. Its positions are preset, so the procedure is relatively simple and we skip it here.

Location calculation

Next comes the location calculation:

• By measuring the pixel positions of the two ArUco markers in the capture area, we can calculate the pixel distance M1 between them. Measuring the actual distance M2 between the two markers then gives the ratio of actual distance to pixels: ratio = M2 / M1.

• From the picture we can calculate the pixel difference between the colour block and the centre of the capture area. Multiplying this difference by the ratio gives the block's actual coordinates (x1, y1) relative to the centre of the capture area.

• Adding the block's coordinates (x1, y1) relative to the centre of the capture area to the coordinates (x2, y2) of that centre relative to the manipulator gives the coordinates (x3, y3) of the block relative to the manipulator. For the specific implementation, see the program source code (available on GitHub).
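The three steps above can be sketched as a single function. This is a minimal illustration, not the project's GitHub code: the function name `block_to_robot` and its parameters are hypothetical, and it assumes that the capture-area centre is the midpoint of the two ArUco markers and that the image axes are aligned with the arm's axes (a real setup may need swapped or negated axes).

```python
import math


def block_to_robot(block_px, aruco1_px, aruco2_px, aruco_dist_mm, centre_to_robot_mm):
    """Convert a block's pixel position into coordinates relative to the arm.

    block_px, aruco1_px, aruco2_px: (u, v) pixel positions in the video frame
    aruco_dist_mm: measured real distance M2 between the two ArUco markers
    centre_to_robot_mm: (x2, y2), the capture-area centre relative to the arm
    """
    # M1: pixel distance between the two markers
    m1 = math.dist(aruco1_px, aruco2_px)
    # ratio = M2 / M1, i.e. millimetres per pixel
    ratio = aruco_dist_mm / m1
    # Assumption: the capture-area centre is the midpoint of the two markers
    cx = (aruco1_px[0] + aruco2_px[0]) / 2
    cy = (aruco1_px[1] + aruco2_px[1]) / 2
    # (x1, y1): block offset from the centre, converted to millimetres
    x1 = (block_px[0] - cx) * ratio
    y1 = (block_px[1] - cy) * ratio
    # (x3, y3) = (x1, y1) + (x2, y2); assumes image axes align with arm axes
    x2, y2 = centre_to_robot_mm
    return x1 + x2, y1 + y2


# Markers 200 px apart that are 100 mm apart in reality -> 0.5 mm per pixel
print(block_to_robot((250, 140), (100, 100), (300, 100), 100, (150, 0)))
# (175.0, 20.0)
```

Here the block is 50 px right of and 40 px below the centre, which becomes (25 mm, 20 mm); adding the centre's offset of (150 mm, 0 mm) gives the block's position relative to the arm.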

myCobot-Jetson Nano, jointly produced by Elephant Robotics and NVIDIA, is the world's smallest Jetson Nano six-axis collaborative robot arm. It is compact but powerful, can be fitted with a variety of end effectors for different application scenarios, and can be further developed to meet users' custom requirements.

NVIDIA value

Elephant Robotics cooperates with NVIDIA to create breakthrough products, including the world's smallest Jetson Nano six-axis collaborative manipulator, offering high-quality products at a competitive price.

The Jetson Nano embedded in the robot arm is a compact AI computer with high performance and low power consumption. It can run modern AI workloads, run multiple neural networks in parallel, and process data from multiple high-resolution sensors simultaneously.

It is an ideal tool for starting to learn AI and robotics. Its comprehensive software libraries cover deep learning, computer vision, graphics, multimedia and more, helping you get started quickly.

Service and Vision

We are committed to providing highly flexible collaborative robots, easy-to-learn operating systems and intelligent automation solutions for robot education and scientific research institutions, business scenarios and industrial production. Our product quality and intelligent solutions have been recognized and praised by factories of several of the world's top 500 enterprises in countries such as South Korea, Japan, the United States, Germany, Italy and France.

About Us

Founded in Shenzhen, China in 2016, Elephant Robotics is a high-tech enterprise focusing on robot R&D, design and automation solutions.

Elephant Robotics adheres to the vision of "Enjoy Robots World" and advocates collaboration between humans and robots, aiming to make robots good helpers in human work and life and to help people create a better life.

In the future, Elephant Robotics hopes to promote the development of the robot industry through a new generation of cutting-edge technology and jointly open a new era of automation and intelligence with customers and partners.

Official website: https://www.elephantrobotics.com/en/

NVIDIA: https://www.nvidia.com/en-us/



Credits

Elephant Robotics

Elephant Robotics is a technology firm specializing in the design and production of robots, the development and application of operating systems, and intelligent manufacturing services for industry, commerce, education, scientific research, the home and more.