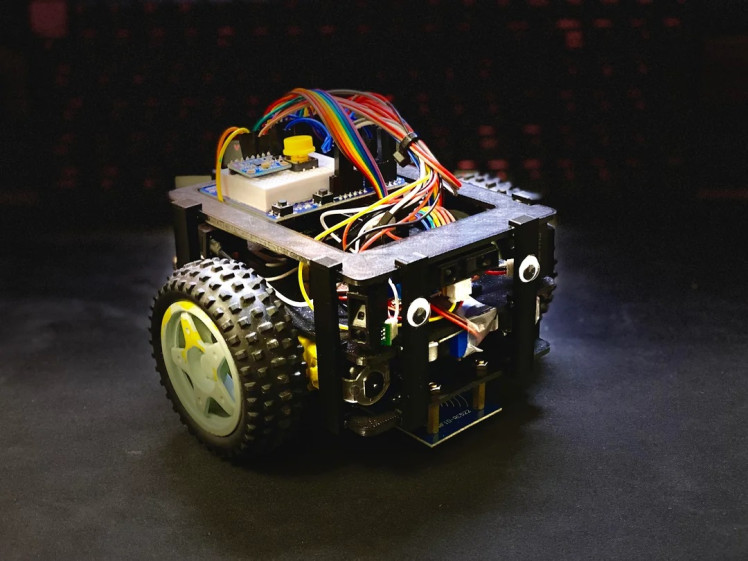

Jerry 3.0: Our ESP32-Powered Maze-Solving Robot

About the project

Jerry 3.0 is a compact maze-solving robot (16×16 cm) powered by an ESP32 microcontroller. It uses IR sensors, an RFID reader (SPI), and a gyroscope for navigation. A WiFi-based web interface allows real-time PID tuning and profile switching during testing. Ready for competition!

Project info

Difficulty: Moderate

Platforms: PlatformIO

Estimated time: 1 month

License: GNU General Public License, version 3 or later (GPL3+)

Items used in this project

Hardware components


Story

Our project, Jerry 3.0, is an autonomous maze-solving robot designed to navigate complex labyrinths using a combination of sensors, algorithms, and real-time control features. The robot is compact, measuring just 16×16 cm, and is equipped with an RFID reader, infrared distance sensors, and a gyroscope to interpret its surroundings and make navigation decisions. Jerry 3.0 was developed for the "Mobile Robots in the Maze" competition at Óbuda University in Hungary, where robots compete to solve mazes as quickly and efficiently as possible. This is the third iteration of our robot, and it represents significant advancements in both hardware and software compared to previous versions.

The decision to build Jerry 3.0 stems from our passion for robotics and problem-solving. The "Mobile Robots in the Maze" competition provides an excellent platform to challenge ourselves and apply theoretical knowledge in a practical setting. Over the past two years, we’ve learned a lot from participating in this event. Our first robot struggled with precision due to slippage and sensor inaccuracies, while last year’s version improved but still faced limitations in turning radius and sensor reliability. These challenges motivated us to design a more advanced robot that could overcome these issues.

This year, we decided to transition from Arduino-based controllers to an ESP32 microcontroller, which allowed us to implement more sophisticated features like real-time WiFi monitoring and tuning. We also wanted to explore new technologies such as infrared-based wall detection while improving the robot’s overall efficiency and adaptability.

How does it work?

Jerry 3.0 navigates mazes by combining data from multiple sensors with intelligent algorithms for pathfinding and obstacle avoidance. Here’s how it works in detail:

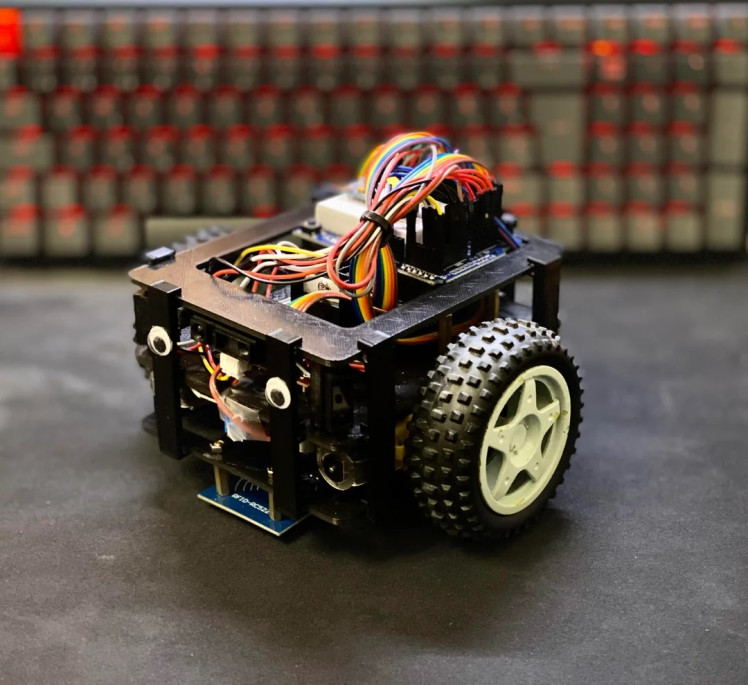

Sensor Suite

The robot uses three infrared (IR) distance sensors to measure its proximity to walls and obstacles. Two sensors are mounted on the sides for wall alignment, while one is positioned at the front for obstacle detection. A Kalman filter processes the sensor data to reduce noise and improve accuracy.
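A scalar Kalman filter per IR channel is one simple way to do this smoothing. The sketch below assumes a random-walk model, where the wall distance is expected to stay roughly constant between samples. It uses placeholder noise variances `q` and `r` that would need tuning against real sensor data. The struct name `ScalarKalman` is hypothetical and does not come from Jerry's firmware.

```cpp
// Scalar Kalman filter for one IR distance channel (random-walk model).
struct ScalarKalman {
    float q;                  // process noise variance (placeholder, tune per sensor)
    float r;                  // measurement noise variance (placeholder, tune per sensor)
    float x = 0.0f;           // current distance estimate
    float p = 1.0f;           // variance of the estimate
    bool initialized = false;

    ScalarKalman(float processNoise, float measurementNoise)
        : q(processNoise), r(measurementNoise) {}

    // Feeds one raw reading and returns the filtered distance.
    float update(float z) {
        if (!initialized) {   // seed the filter with the first reading
            x = z;
            p = r;
            initialized = true;
            return x;
        }
        p += q;                    // predict: distance unchanged, uncertainty grows
        float k = p / (p + r);     // Kalman gain: how much to trust the new reading
        x += k * (z - x);          // correct the estimate toward the reading
        p *= (1.0f - k);           // the correction reduces uncertainty
        return x;
    }
};
```

Each of the three sensors would get its own instance, for example `ScalarKalman front(0.01f, 4.0f);`, fed with every new reading. Making `r` large relative to `q` smooths harder. The cost is a slower reaction when a wall appears or disappears.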

An RFID reader (MFRC522 module connected via SPI) detects tags placed throughout the maze that contain directional instructions (e.g., turn left or right). These tags guide Jerry through predefined paths or help it make decisions in ambiguous situations.
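This section does not describe how the directional instructions are stored on the tags. The sketch below therefore assumes a hypothetical encoding in which the first byte of the data read from a tag is an ASCII letter (`L`, `R`, `F`, `B`). It also assumes a 4-byte UID packed into a `uint32_t`. Repeated reads are debounced so that one tag triggers only one command while the robot rolls over it. `decodeTag`, `TagCommand` and `TagLatch` are illustrative names.

```cpp
#include <cstddef>
#include <cstdint>

// Commands a maze tag can carry (hypothetical set).
enum class TagCommand { TurnLeft, TurnRight, GoStraight, TurnAround, Unknown };

// Hypothetical encoding: the first data byte is an ASCII command letter.
TagCommand decodeTag(const std::uint8_t* data, std::size_t len) {
    if (data == nullptr || len == 0) return TagCommand::Unknown;
    switch (data[0]) {
        case 'L': return TagCommand::TurnLeft;
        case 'R': return TagCommand::TurnRight;
        case 'F': return TagCommand::GoStraight;
        case 'B': return TagCommand::TurnAround;
        default:  return TagCommand::Unknown;  // blank, corrupted or foreign tag
    }
}

// Lets each tag trigger only once, even if the reader reports it on several
// consecutive polls while the robot drives over it. Assumes a 4-byte UID.
struct TagLatch {
    std::uint32_t lastUid = 0;
    bool hasLast = false;

    bool isNew(std::uint32_t uid) {
        if (hasLast && uid == lastUid) return false;
        lastUid = uid;
        hasLast = true;
        return true;
    }
};
```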

The MPU-6050 gyroscope measures angular rate. Integrating that rate over time gives the rotation angle during turns, which keeps navigation accurate even in tight corners.
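A minimal heading tracker might look like the sketch below. It makes three assumptions:

- The MPU-6050 is configured for its ±250 °/s full-scale range, which the datasheet gives as 131 LSB per °/s.
- `biasRaw` was averaged while the robot stood still.
- The control loop supplies the elapsed time `dt`.

Whether a positive reading means a left or a right turn depends on how the board is mounted. `HeadingTracker` and `turnComplete` are hypothetical names.

```cpp
#include <cstdint>

// Integrates the MPU-6050 z-axis rate into a heading angle.
// Assumes the +/-250 deg/s range (131 LSB per deg/s) and a bias measured at rest.
struct HeadingTracker {
    static constexpr float kLsbPerDegPerSec = 131.0f;

    float biasRaw = 0.0f;     // average raw z reading while stationary
    float headingDeg = 0.0f;  // rotation accumulated since the last reset

    // rawGyroZ: raw 16-bit reading; dtSeconds: time since the previous sample.
    void update(std::int16_t rawGyroZ, float dtSeconds) {
        float rateDegPerSec = (rawGyroZ - biasRaw) / kLsbPerDegPerSec;
        headingDeg += rateDegPerSec * dtSeconds;  // rectangular integration
    }

    void reset() { headingDeg = 0.0f; }
};

// True once the tracker has rotated past targetDeg (sign gives the direction).
bool turnComplete(const HeadingTracker& h, float targetDeg) {
    return targetDeg >= 0.0f ? h.headingDeg >= targetDeg
                             : h.headingDeg <= targetDeg;
}
```

Simple rectangular integration accumulates drift from any residual bias. That drift is tolerable over a single turn, which is why the sketch resets the tracker at the start of each one.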

Control System

At the heart of Jerry 3.0 is an ESP32 microcontroller (Wemos D1 R32 board), which handles all sensor inputs, motor control, and decision-making logic. The ESP32’s dual-core architecture allows one core to manage sensor processing while the other handles communication tasks like hosting a web interface.
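When one core runs the control loop and the other serves the web page, the two sides need a safe way to share the latest sensor readings. The sketch below shows one common pattern: a single-slot mailbox guarded by a mutex, written in portable C++ with `std::mutex`. On the ESP32 itself, the two sides would typically be FreeRTOS tasks (for example, created with `xTaskCreatePinnedToCore`), and a FreeRTOS mutex could play the same role. The struct, class and field names are hypothetical.

```cpp
#include <mutex>

// Latest values produced by the control loop (field names hypothetical).
struct SensorSnapshot {
    float leftDist = 0.0f;
    float frontDist = 0.0f;
    float rightDist = 0.0f;
    float headingDeg = 0.0f;
};

// Single-slot mailbox: the control side overwrites the slot, the web side
// copies out the newest value. The lock is held only for one struct copy.
class SnapshotMailbox {
public:
    void publish(const SensorSnapshot& s) {
        std::lock_guard<std::mutex> lock(mutex_);
        latest_ = s;
    }

    SensorSnapshot read() const {
        std::lock_guard<std::mutex> lock(mutex_);
        return latest_;
    }

private:
    mutable std::mutex mutex_;  // mutable so read() can stay const
    SensorSnapshot latest_;
};
```

In this arrangement, the control task calls `publish()` once per loop iteration, and the HTTP handler calls `read()` when a client asks for data. Because the lock covers only a struct copy, a slow client can never stall the control loop for longer than that copy.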

Web Interface

One of Jerry’s standout features is its WiFi-based web interface, hosted on the ESP32 in SoftAP mode. It lets us connect directly to the robot from a smartphone or laptop during testing. Through this interface, we can monitor live sensor data (such as wall distances), adjust PID parameters on the fly, and load different operational profiles (e.g., "sprint mode" for speed-focused runs or conservative settings for obstacle-heavy mazes). This feature has been invaluable for fine-tuning Jerry’s behavior without reflashing the firmware after every change.
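Profile switching and live tuning can both be modeled as writes into one parameter struct. In the sketch below, the profile names follow the description above. The gain and speed values, however, are placeholders rather than Jerry's tuned numbers, and the query keys (`kp`, `ki`, `kd`, `speed`) are hypothetical. A web server library would normally parse the query string for you; the parsing is spelled out here so the example stays self-contained.

```cpp
#include <cstdlib>
#include <map>
#include <string>

// Hypothetical tuning profile; field names are illustrative.
struct Profile {
    float kp, ki, kd;
    int baseSpeed;  // PWM duty for straight driving (0..255 assumed)
};

// Placeholder presets for the two modes mentioned above (not real tuned values).
const std::map<std::string, Profile> kProfiles = {
    {"sprint",       {1.8f, 0.0f, 0.6f, 220}},
    {"conservative", {1.2f, 0.0f, 0.4f, 140}},
};

// Applies "key=value" pairs such as "kp=1.5&kd=0.3" to p.
// Unknown keys are ignored; a known key with an unparsable value is left
// unchanged and makes the function return false.
bool applyQuery(Profile& p, const std::string& query) {
    bool ok = true;
    std::size_t pos = 0;
    while (pos <= query.size()) {
        std::size_t amp = query.find('&', pos);
        if (amp == std::string::npos) amp = query.size();
        const std::string pair = query.substr(pos, amp - pos);
        pos = amp + 1;

        const std::size_t eq = pair.find('=');
        if (eq == std::string::npos) continue;            // not a key=value pair
        const std::string key = pair.substr(0, eq);
        const std::string val = pair.substr(eq + 1);

        float* target = nullptr;
        if (key == "kp") target = &p.kp;
        else if (key == "ki") target = &p.ki;
        else if (key == "kd") target = &p.kd;
        else if (key != "speed") continue;                // unknown key: ignore

        char* end = nullptr;
        const float v = std::strtof(val.c_str(), &end);
        if (val.empty() || *end != '\0') { ok = false; continue; }  // reject bad number
        if (target) *target = v;
        else p.baseSpeed = static_cast<int>(v);
    }
    return ok;
}
```

For example, a request ending in `?kp=1.5&kd=0.3` would change only those two gains and leave the rest of the active profile untouched. A value that fails to parse is rejected instead of silently becoming zero.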

Navigation Algorithm

Jerry follows a hybrid wall-following algorithm:

If walls are present on both sides, it centers itself using PID control.

If only one wall is available, it maintains a fixed distance from that wall.

If no walls are detected, it uses gyroscope data to maintain its heading.
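The three cases map naturally onto a mode selector plus a single signed steering error that one PID controller can act on. This sketch rests on the following assumptions:

- The wall threshold and target distance are made-up values in cm.
- A positive error means "steer right".
- A positive heading means the robot has rotated to the left.

None of these names come from Jerry's actual code.

```cpp
enum class NavMode { Center, FollowLeft, FollowRight, GyroHold };

// Placeholder geometry in cm; real values depend on the maze and sensor mounting.
constexpr float kWallThreshold = 15.0f;  // side reading above this = no wall
constexpr float kTargetWall = 5.0f;      // desired gap when following one wall

// Picks the wall-following case from the two side distances.
NavMode selectMode(float left, float right) {
    const bool hasLeft = left < kWallThreshold;
    const bool hasRight = right < kWallThreshold;
    if (hasLeft && hasRight) return NavMode::Center;
    if (hasLeft) return NavMode::FollowLeft;
    if (hasRight) return NavMode::FollowRight;
    return NavMode::GyroHold;
}

// Signed steering error; positive means "steer right".
float steeringError(NavMode mode, float left, float right,
                    float headingDeg, float targetHeadingDeg) {
    switch (mode) {
        case NavMode::Center:      return right - left;        // closer to left wall -> steer right
        case NavMode::FollowLeft:  return kTargetWall - left;  // too close to left wall -> steer right
        case NavMode::FollowRight: return right - kTargetWall; // too far from right wall -> steer right
        case NavMode::GyroHold:    return headingDeg - targetHeadingDeg;  // drifted left -> steer right
    }
    return 0.0f;
}

// Textbook PID on the steering error.
struct Pid {
    float kp, ki, kd;
    float integral = 0.0f;
    float prevError = 0.0f;
    bool first = true;

    Pid(float p, float i, float d) : kp(p), ki(i), kd(d) {}

    float update(float error, float dtSeconds) {
        integral += error * dtSeconds;
        const float derivative = first ? 0.0f : (error - prevError) / dtSeconds;
        first = false;
        prevError = error;
        return kp * error + ki * integral + kd * derivative;
    }

    // Clears history, e.g. when the navigation mode changes.
    void reset() { integral = 0.0f; prevError = 0.0f; first = true; }
};
```

When the mode changes (for example, when a side wall ends), calling `reset()` on the controller avoids carrying integral and derivative state from one error definition into another.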

The RFID reader provides additional guidance by decoding directional commands embedded in maze tags.

Movement

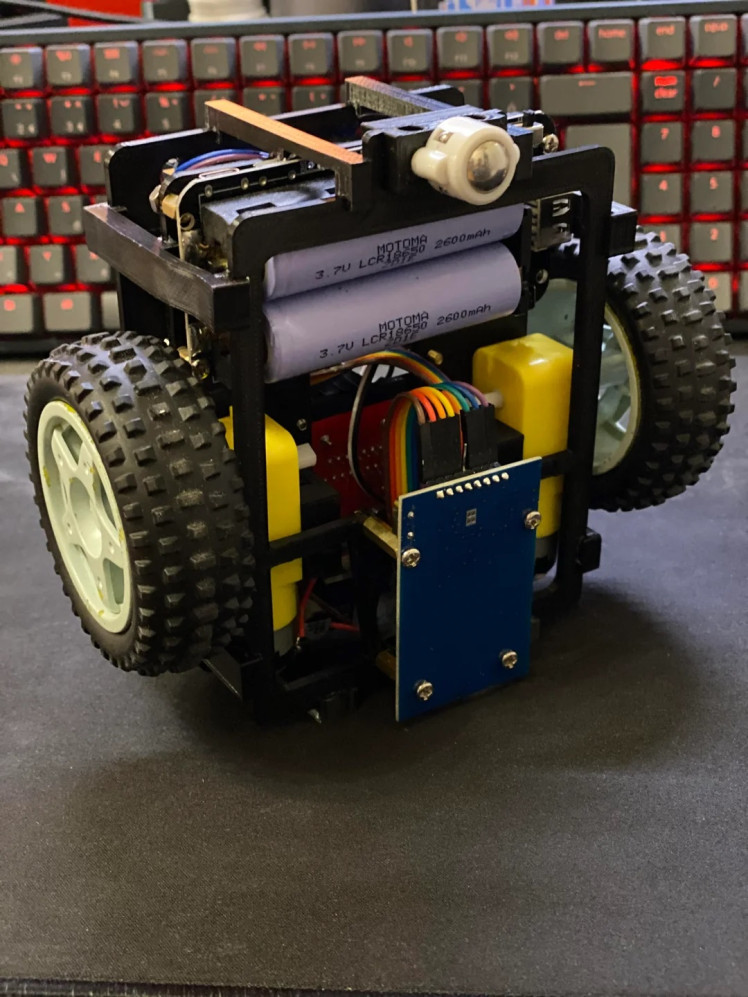

Jerry’s movement is powered by two DC motors controlled by an L298N motor driver. The motors enable tank-style steering, allowing Jerry to turn in place or move forward/backward with precision. The robot’s turning radius has been optimized to less than 17 cm.
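Tank-style steering reduces to adding a correction to one wheel and subtracting it from the other. The sketch below converts signed wheel speeds into L298N channel settings, with IN1/IN2 selecting direction and the enable pin carrying the PWM duty. It assumes 8-bit PWM and that `in1 = true` means forward; the actual mapping depends on how the motors are wired. The type and function names are hypothetical.

```cpp
#include <algorithm>

// Settings for one L298N channel: IN1/IN2 select direction, ENA/ENB gets PWM.
struct MotorCommand {
    bool in1;
    bool in2;
    int duty;  // 0..255, assuming 8-bit PWM
};

struct DriveCommand {
    MotorCommand left;
    MotorCommand right;
};

// Converts a signed speed into direction pins plus PWM duty.
// Assumes in1 = true, in2 = false drives the wheel forward (depends on wiring).
MotorCommand toL298N(int speed) {
    speed = std::clamp(speed, -255, 255);
    if (speed > 0) return {true, false, speed};
    if (speed < 0) return {false, true, -speed};
    return {false, false, 0};  // enable at 0% duty: the motor coasts to a stop
}

// Tank-style mixing: positive correction speeds up the left wheel and slows
// the right one, so the robot curves to the right.
DriveCommand mix(int baseSpeed, int correction) {
    return {toL298N(baseSpeed + correction), toL298N(baseSpeed - correction)};
}

// Turning in place: equal speeds in opposite directions (positive = clockwise).
DriveCommand spinInPlace(int speed) { return mix(0, speed); }
```

The sign convention here, where a positive correction turns right, is an assumption. It only has to match the sign of the steering error fed into `mix()`.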

Power Supply

Two NCR18650B Li-ion batteries provide power for all components, regulated by an XL6009 buck-boost converter.

Jerry 3.0 combines compact design with advanced features like real-time WiFi monitoring, RFID navigation, and precise sensor fusion techniques. It represents our team’s dedication to improving upon previous designs while exploring new technologies in robotics. We’re excited to see how Jerry performs at the competition!
