Emotional Analysis For The Visually Impaired

About the project

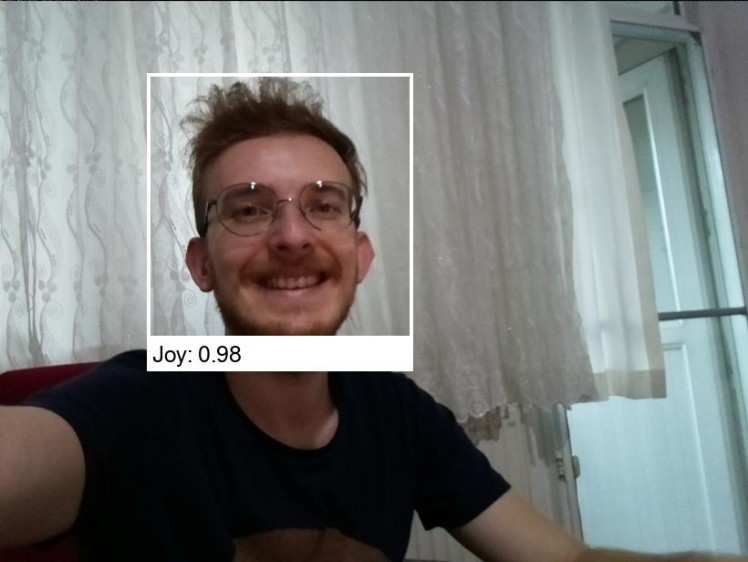

Visually impaired people cannot easily perceive the emotions of the person they are talking to. This project helps them understand the feelings of the people they are talking to. I'm using the Google Joy Detector software together with the Google Text to Speech API.

Project info

Difficulty: Moderate

Platforms: Google, Raspberry Pi, Windows

Estimated time: 4 hours

License: GNU General Public License, version 3 or later (GPL3+)

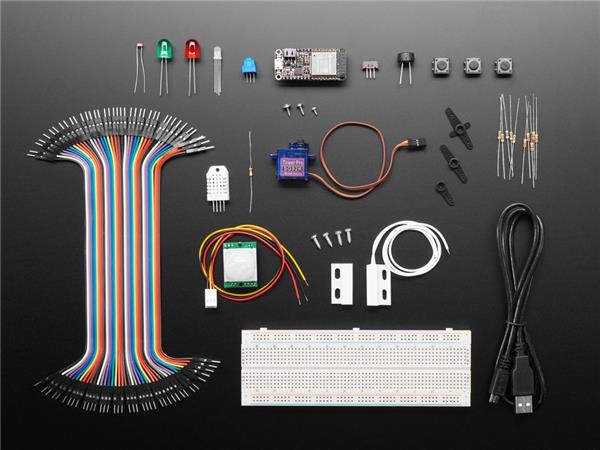

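Items used in this project

Hardware components

Google AIY Vision Kit

Google AIY Voice Kit

Software apps and online services

Google AIY Joy Detector

Google Text to Speech

Adafruit IO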

Story

Before getting started, I would like to mention that developing this project was very difficult for me because of health problems. But don't worry, I'm much better now and I keep getting better.

When I saw the contest's theme, I decided to build this idea. I thought about how difficult my life would be if I were visually impaired. With the camera on the Google AIY Vision Kit and the Google Joy Detector, the emotions of the person in front of the camera can be detected.

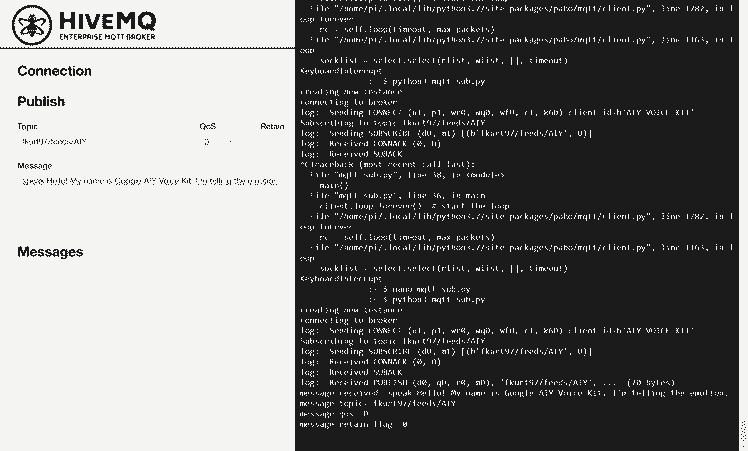

Since the software on the Raspberry Pi boards in both kits was out of date, I had to update it first. I send the emotion data from the Vision Kit to the Google AIY Voice Kit over MQTT. I chose MQTT, one of the most widely used protocols for the Internet of Things, for fast communication between the two devices. The Vision Kit transmits the emotion data, and the Voice Kit speaks the data it receives.

MQTT needs a broker. I used Adafruit IO, one of Adafruit's services, as the MQTT broker because it offers free data transfer, which makes it a good fit for students.

For the Vision Kit, I wrote an MQTT publisher in Python. It works with the Joy Detector and publishes the emotion analysis to the MQTT broker. On the Voice Kit side, I wrote an MQTT subscriber in Python. It uses the AIY Kit's Text to Speech library to convert the incoming emotion analysis data into speech.
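The publishing step on the Vision Kit can be sketched in Python as below. This is a minimal sketch, not the code from the author's repository: it assumes the Joy Detector yields a joy score between 0 and 1 for each detected face, and the thresholds, the spoken wording, the feed key `emotion`, and the helper names (`feed_topic`, `describe_joy`, `publish_emotion`) are hypothetical. The MQTT publish function is passed in as a parameter so the logic can be tried without the camera or a network connection.

```python
# Minimal sketch of the Vision Kit publisher (not the author's repository code).
# Assumptions: the thresholds, the spoken wording and the hypothetical feed key
# "emotion" are illustrative; publish is any callable taking (topic, payload),
# for example the publish method of a connected paho-mqtt client.
from statistics import mean
from typing import Callable, Optional, Sequence

# Assumed cut-offs for the average joy score (0.0 = no smile, 1.0 = big smile).
HAPPY_THRESHOLD = 0.85
SERIOUS_THRESHOLD = 0.10


def feed_topic(username: str, feed_key: str) -> str:
    """Build an Adafruit IO MQTT topic of the form '<username>/feeds/<feed_key>'."""
    return f"{username}/feeds/{feed_key}"


def describe_joy(joy_scores: Sequence[float]) -> str:
    """Turn the joy scores of all detected faces into a short sentence."""
    if not joy_scores:
        return "No face detected"
    score = mean(joy_scores)
    if score >= HAPPY_THRESHOLD:
        return "The person looks happy"
    if score > SERIOUS_THRESHOLD:
        return "The person looks neutral"
    return "The person looks serious"


def publish_emotion(
    publish: Callable[[str, str], object],
    topic: str,
    joy_scores: Sequence[float],
    last_message: Optional[str] = None,
) -> str:
    """Publish the sentence only when it differs from the last one sent.

    Skipping repeats keeps the feed quiet while the expression is unchanged,
    which matters because Adafruit IO rate-limits free accounts.
    """
    message = describe_joy(joy_scores)
    if message != last_message:
        publish(topic, message)
    return message
```

On the kit, `joy_scores` could plausibly come from `[face.joy_score for face in face_detection.get_faces(result)]` inside the AIY Vision `CameraInference` loop (assumed from the AIY Vision library), and `publish` from a paho-mqtt client that has called `username_pw_set(username, aio_key)`, `connect("io.adafruit.com", 1883)` and `loop_start()`. Keeping the returned message and passing it back as `last_message` on the next frame avoids speaking the same sentence over and over.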

You can find my Python code in my GitHub repository.
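For the Voice Kit subscriber described above, the core is a message callback that decodes each MQTT payload and hands it to text-to-speech. The sketch below is an illustration under stated assumptions, not the author's code: the helper name `make_on_message` is hypothetical, and the `speak` function is injected so the handler can run without audio hardware. On the Voice Kit it would presumably be the AIY library's `aiy.voice.tts.say`.

```python
# Minimal sketch of the Voice Kit subscriber (not the author's repository code).
# Assumption: speak is injected so the handler can run without hardware; on the
# Voice Kit it would be a text-to-speech function such as aiy.voice.tts.say.
from typing import Callable


def make_on_message(speak: Callable[[str], object]):
    """Return a callback with paho-mqtt's on_message(client, userdata, message) shape."""

    def on_message(client, userdata, message):
        # MQTT delivers the payload as bytes; the feed values here are plain text.
        text = message.payload.decode("utf-8", errors="replace").strip()
        if text:  # ignore empty or whitespace-only messages
            speak(text)

    return on_message
```

With paho-mqtt, the handler would be attached via `client.on_message = make_on_message(tts.say)`, followed by `username_pw_set(username, aio_key)`, `connect("io.adafruit.com", 1883)`, `subscribe("<username>/feeds/<feed_key>")` and `loop_forever()`. Note that paho-mqtt 2.x also expects a callback API version argument when the `Client` is constructed.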
