4 Steps to Develop an Edge-AI Road Segmentation Cam

About the project

Suitable for ride-share, autopilot, and self-driving applications.

Project info

Difficulty: Easy

Platforms: Intel, Raspberry Pi, ON Semiconductor

Estimated time: 1 hour

License: Apache License 2.0 (Apache-2.0)


Story

What is the hottest, most promising, and most exciting technology segment today? The metaverse? No. I would say it is self-driving, judging by the concepts, the products, and the stock prices on Nasdaq :). There are already many excellent electric vehicle companies, for example Tesla, Rivian, and Li Auto, and all of them offer ADAS (Advanced Driver Assistance Systems).

When we talk about ADAS technology, one common area is perception. Perception means sensing and interpreting the surroundings using computer vision, machine learning and deep learning, image sensors, LiDARs, and so on. Sounds complicated? It is. To get a first feel for the concept, we can build a simple, common road segmentation demo and see how this kind of application is developed.

Details

Below we show how to develop a road segmentation demo using OpenNCC and its open-source SDK. The deployed road segmentation model is one of Intel OpenVINO's pre-trained models. For better performance, you can replace it with a model you trained yourself, and the development steps stay the same.
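Post-processing a segmentation model usually starts by turning per-class scores into one label per pixel. The sketch below is minimal and rests on several assumptions.

- It assumes the model emits a planar (NCHW, batch size 1) float tensor of shape C x H x W.
- The original does not name the model. As one example, OpenVINO's road-segmentation-adas-0001 is documented with four output classes: background, road, curb, and lane mark.
- The helper `argmaxClassMap` is hypothetical. It is not part of the OpenNCC SDK, and the demo's own parsing code may differ.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper (not an OpenNCC SDK function): converts a planar
// C x H x W score tensor (NCHW layout, batch size 1) into an H x W map of
// class ids by choosing, for each pixel, the channel with the highest score.
std::vector<std::uint8_t> argmaxClassMap(const std::vector<float>& scores,
                                         std::size_t channels,
                                         std::size_t height,
                                         std::size_t width) {
    const std::size_t plane = height * width;  // pixels per channel
    assert(channels > 0);
    assert(scores.size() == channels * plane);

    std::vector<std::uint8_t> classes(plane, 0);
    for (std::size_t p = 0; p < plane; ++p) {
        float best = scores[p];  // score of channel 0 at pixel p
        std::uint8_t bestClass = 0;
        for (std::size_t c = 1; c < channels; ++c) {
            const float s = scores[c * plane + p];  // channel c, same pixel
            if (s > best) {
                best = s;
                bestClass = static_cast<std::uint8_t>(c);
            }
        }
        classes[p] = bestClass;
    }
    return classes;
}
```

For a four-class output, `argmaxClassMap(scores, 4, height, width)` returns an H x W map whose entries are class ids 0 to 3. A colored overlay or a road mask can then be derived from that map.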

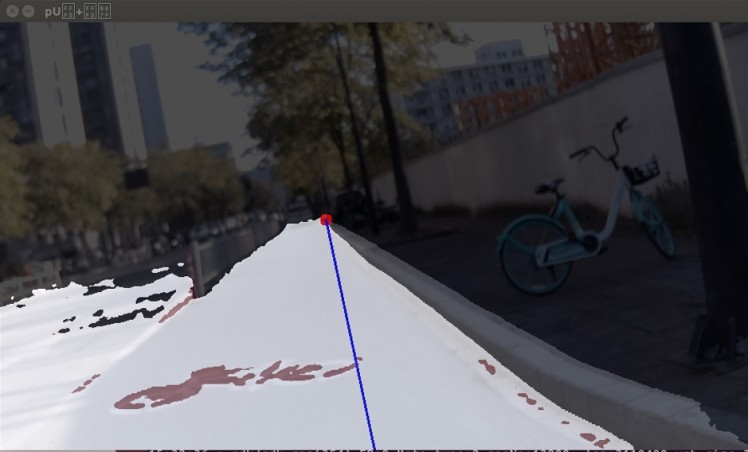

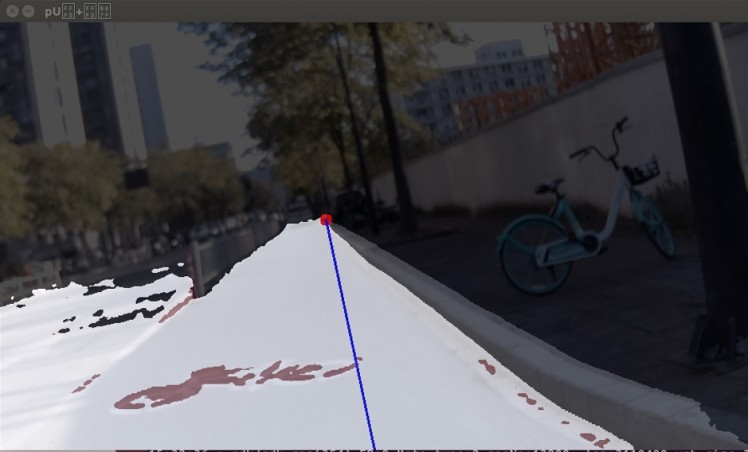

In addition to parsing the output of the segmentation model, the demo also judges the road direction through corner recognition and analysis of the distances between points.
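The original does not detail its corner-recognition step. The sketch below therefore substitutes a much simpler heuristic to show how direction can be judged from point distances. It is not the demo's method.

How the heuristic works:

- It takes the horizontal center of the road pixels in the nearest (bottom-most) row that contains road.
- It does the same for the farthest (top-most) row.
- It classifies the bend by the signed distance between those two points, as a fraction of the image width.

Assumptions:

- The function names and the `threshold` tuning parameter are hypothetical.
- The road class id is also assumed. Class 1 is "road" in road-segmentation-adas-0001, if that is the model in use.
- The code needs C++17 for `std::optional`.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <string>
#include <vector>

// Hypothetical sketch, NOT the OpenNCC demo's algorithm.
// Returns the mean column index of road pixels in `row`, or std::nullopt
// if the row contains no road pixels.
std::optional<double> roadCenterInRow(const std::vector<std::uint8_t>& classMap,
                                      std::size_t width, std::size_t row,
                                      std::uint8_t roadClass) {
    double sum = 0.0;
    std::size_t count = 0;
    for (std::size_t x = 0; x < width; ++x) {
        if (classMap[row * width + x] == roadClass) {
            sum += static_cast<double>(x);
            ++count;
        }
    }
    if (count == 0) return std::nullopt;
    return sum / static_cast<double>(count);
}

// Compares the road center in the farthest row with the one in the nearest
// row. `threshold` (an assumed tuning value) is a fraction of image width.
std::string estimateRoadDirection(const std::vector<std::uint8_t>& classMap,
                                  std::size_t height, std::size_t width,
                                  std::uint8_t roadClass, double threshold) {
    assert(classMap.size() == height * width);
    std::optional<double> nearCenter;
    std::optional<double> farCenter;

    // Nearest road row: scan upward from the bottom of the image.
    for (std::size_t y = height; y-- > 0;) {
        nearCenter = roadCenterInRow(classMap, width, y, roadClass);
        if (nearCenter) break;
    }
    // Farthest road row: scan downward from the top of the image.
    for (std::size_t y = 0; y < height; ++y) {
        farCenter = roadCenterInRow(classMap, width, y, roadClass);
        if (farCenter) break;
    }
    if (!nearCenter || !farCenter) return "no road";

    // Signed horizontal distance between the two points, normalized by width.
    const double offset = (*farCenter - *nearCenter) / static_cast<double>(width);
    if (offset < -threshold) return "left";
    if (offset > threshold) return "right";
    return "straight";
}
```

Relying on a single far row makes this estimate sensitive to segmentation noise. Averaging over a band of rows, or fitting lines to the road edges and checking where they meet, would be natural refinements.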

Configure the PC

The following commands are run on the PC. Currently, we support Ubuntu versions newer than 16.04.

- Install the dependencies (libusb, ffmpeg, and others)

$ sudo apt-get update

$ sudo apt-get install libgl1-mesa-dev -y

$ sudo apt-get install unixodbc -y

$ sudo apt-get install libpq-dev -y

$ sudo apt-get install ffmpeg -y

$ sudo apt-get install libusb-dev -y

$ sudo apt-get install libusb-1.0-0-dev -y

- Clone the OpenNCC repository

$ git clone https://gitee.com/eyecloud/openncc.git

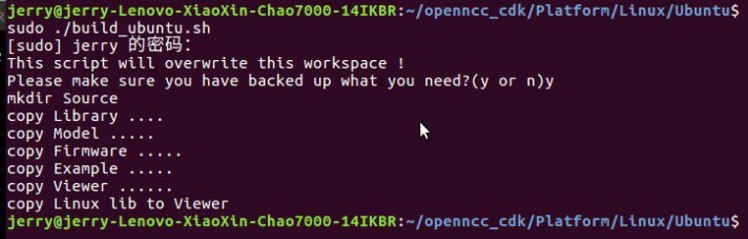

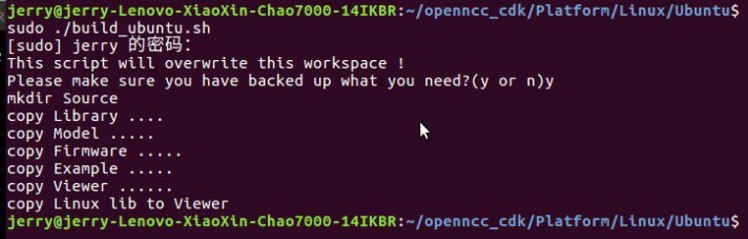

- Build the environment (Ubuntu)

$ cd openncc/Platform/Linux/Ubuntu

$ ./build_ubuntu.sh

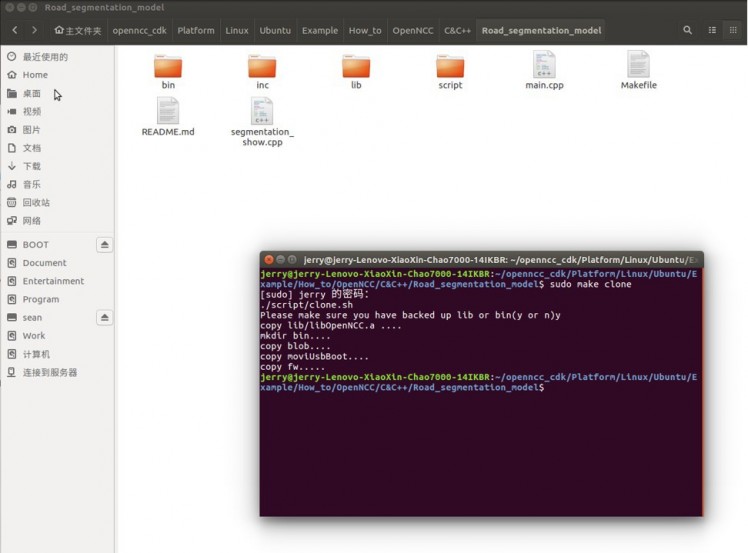

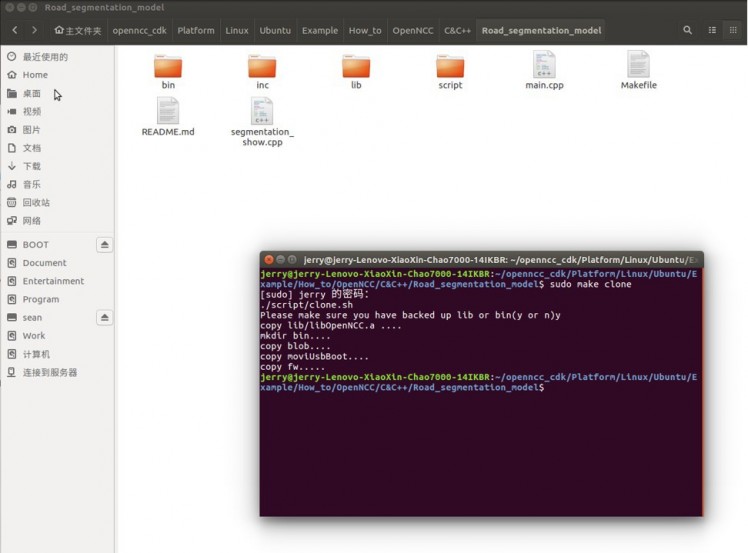

$ cd "Example/How_to/OpenNCC/C&C++/Road_segmentation_model"

$ sudo make clone

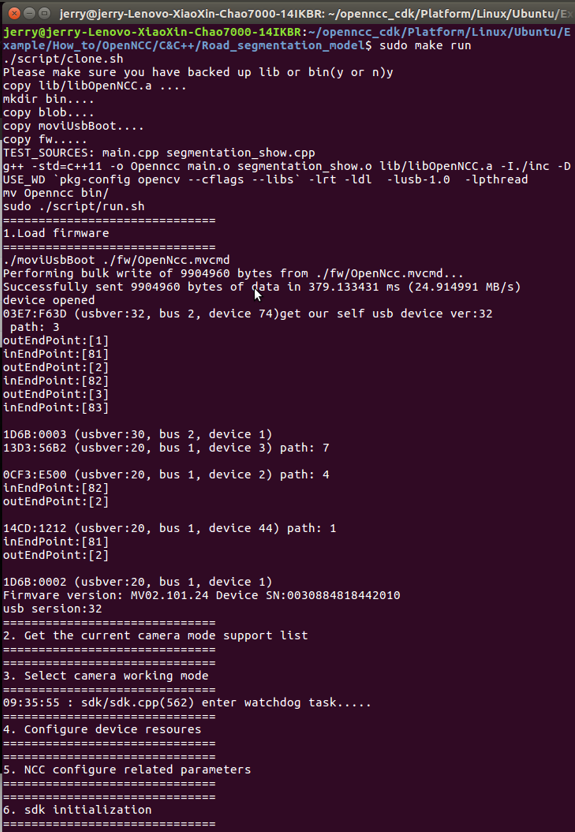

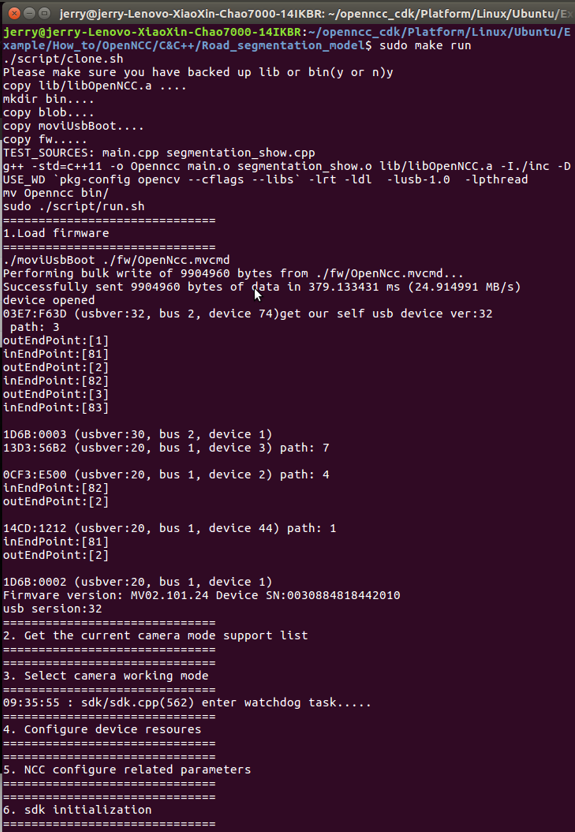

- Compile and run

$ sudo make all

$ sudo make run

Running result