ArUco Markers for Precise Pose Tracking in Robotics

About the project

Explore the fusion of ArUco markers and robotics for advanced pose tracking, enhancing precision and efficiency in machine vision.

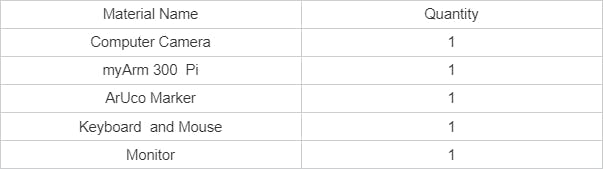

Story

ArUco markers are square binary fiducial markers that resemble simplified QR codes and can be used for efficient scene recognition and location tracking. Their simplicity and efficiency make them well suited to machine vision, especially where real-time, high-precision tracking is required. Combined with machine learning and image processing, a robotic arm system that uses ArUco markers can perform more advanced automation tasks such as precise positioning, navigation, and the execution of complex movements.

This case study demonstrates high-precision control and pose tracking of a robotic arm by combining ArUco markers with the arm's motion control. By walking through the different components of the script, this article explores how machine vision and data processing algorithms can extend what a robotic arm is able to do. It also shows how the arm captures and responds to changes in its environment, and how programming and algorithm optimization improve the efficiency and accuracy of the overall system.

The myArm 300 Pi is the latest seven-degree-of-freedom robotic arm from Elephant Robotics. It is built around a Raspberry Pi 4B (4 GB) and runs a customized Ubuntu MATE 20.04 operating system designed for robotics. With seven degrees of freedom, one more than a six-axis robot, the arm can move almost as flexibly as a human arm.

The myArm has built-in interfaces for highly complex elbow joint posture transformations. In teaching, it can be used to study robot posture, motion path planning, management and use of redundant degrees of freedom, forward and inverse kinematics, the ROS development environment, robotic application development, programming, and low-level data processing, among other robotics subjects. Its hardware interfaces are almost fully compatible with the Raspberry Pi 4B and the Atom at the end of the arm, so users can pair it with their own Raspberry Pi 4B and M5 Atom peripherals to build custom applications.

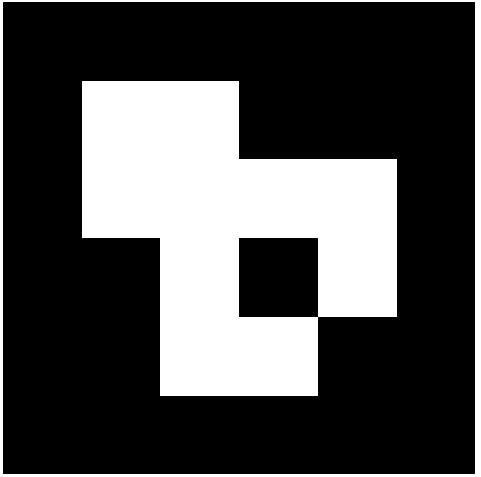

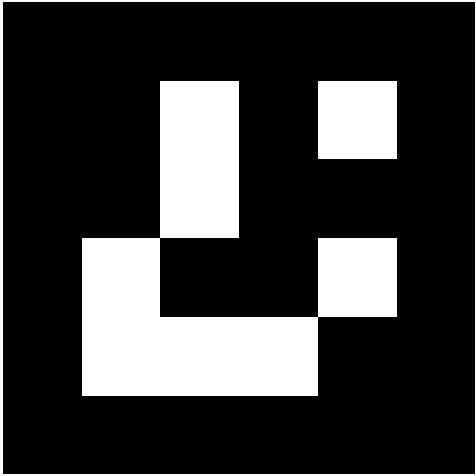

ArUco Marker Codes

ArUco markers are a two-dimensional barcode system widely used in machine vision for marker detection and spatial positioning. The markers consist of black and white patterns, typically square in shape, with a unique binary pattern at the center that allows computer vision systems to recognize them quickly and accurately.

Characteristics of ArUco Markers:

- Uniqueness: Each ArUco marker has a unique encoding, allowing the recognition system to easily distinguish between different markers.

- Low Cost: Compared to other advanced positioning systems, ArUco markers do not require expensive equipment or complex installation. They can simply be printed on paper.

- Positioning and Navigation: In machine vision systems, ArUco markers serve as reference points to help robotic arms or mobile robots determine their own location or navigate to specific positions.

- Pose Estimation: By analyzing images of ArUco markers captured by cameras, the system can calculate the marker's position and orientation (i.e., pose) relative to the camera. This is crucial for precise control of robotic arms or other automated devices.
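The uniqueness property above can be illustrated with a small sketch. Marker dictionaries are designed so that any two patterns differ in many cells, even when one of them is rotated. The two 4x4 grids below are made up for illustration and are not taken from a real ArUco dictionary, and the helper names are hypothetical.

```python
from typing import List

Grid = List[List[int]]


def rotate90(grid: Grid) -> Grid:
    """Rotate a square bit grid 90 degrees clockwise."""
    return [list(row) for row in zip(*grid[::-1])]


def hamming(a: Grid, b: Grid) -> int:
    """Count the cells in which two equally sized grids differ."""
    return sum(x != y for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))


def min_rotated_distance(a: Grid, b: Grid) -> int:
    """Smallest Hamming distance between a and any of the four rotations of b."""
    best = hamming(a, b)
    for _ in range(3):
        b = rotate90(b)
        best = min(best, hamming(a, b))
    return best


# Two made-up 4x4 patterns (NOT from any real ArUco dictionary).
MARKER_A = [[1, 0, 0, 0],
            [0, 1, 1, 0],
            [0, 0, 1, 0],
            [1, 0, 0, 1]]
MARKER_B = [[0, 1, 1, 0],
            [1, 0, 0, 1],
            [1, 1, 0, 0],
            [0, 0, 1, 1]]

print(min_rotated_distance(MARKER_A, MARKER_B))
```

The larger this minimum distance is across a dictionary, the less likely a detector is to confuse two markers when a few cells are misread.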

Operating System: Ubuntu Mate 20.04

Programming Language: Python 3.9+

Main Packages: pymycobot, OpenCV, numpy, math

- pymycobot: A library for controlling the movement of the robotic arm, offering various control interfaces.

- OpenCV: Provides a rich set of image processing and video analysis functions, including object detection, face recognition, motion tracking, and graphic filtering, among others.

- NumPy: A core library for scientific computing that offers high-performance multi-dimensional array objects and tools for handling large data sets.

- math: Provides basic mathematical functions and constants, such as trigonometric, exponential, and logarithmic functions.

1. Definition: Pose tracking typically refers to monitoring and recording an object's precise location (translation) and orientation (rotation) in three-dimensional space, which is its "pose."

2. Technical Application: In the application of robotic arms, pose tracking involves real-time monitoring and control of the precise position and orientation of the arm's joints and end effector. This usually requires complex sensor systems and algorithms to achieve high-precision control.

3. Use: Pose tracking is crucial for performing precise operational tasks, such as assembly and welding in manufacturing, or surgical assistance in the medical field.
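A pose as defined above (a rotation plus a translation) is often stored as a 4x4 homogeneous transform so that poses can be chained. The sketch below is a minimal pure-Python illustration and is not part of the original script. The frame names and numbers are hypothetical, and the rotation is restricted to the Z axis to keep it short.

```python
import math
from typing import List

Matrix = List[List[float]]


def make_pose(yaw_deg: float, x: float, y: float, z: float) -> Matrix:
    """Build a 4x4 transform from a rotation about Z (degrees) and a translation."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    return [[c, -s, 0.0, x],
            [s, c, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]


def compose(a: Matrix, b: Matrix) -> Matrix:
    """Chain two transforms: the result applies b first, then a."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def invert(pose: Matrix) -> Matrix:
    """Invert a rigid transform using R^T and -R^T t."""
    rt = [[pose[j][i] for j in range(3)] for i in range(3)]
    t = [-sum(rt[i][k] * pose[k][3] for k in range(3)) for i in range(3)]
    return [rt[0] + [t[0]], rt[1] + [t[1]], rt[2] + [t[2]], [0.0, 0.0, 0.0, 1.0]]


# Hypothetical example: camera pose in the arm base frame, marker pose in the camera frame (mm).
base_T_camera = make_pose(90.0, 200.0, 0.0, 300.0)
camera_T_marker = make_pose(0.0, 50.0, 0.0, 0.0)

# Marker pose expressed in the arm base frame.
base_T_marker = compose(base_T_camera, camera_T_marker)
print([round(row[3], 3) for row in base_T_marker[:3]])
```

Chaining transforms this way is what lets a marker pose measured by the camera be expressed in the coordinate frame the arm is commanded in.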

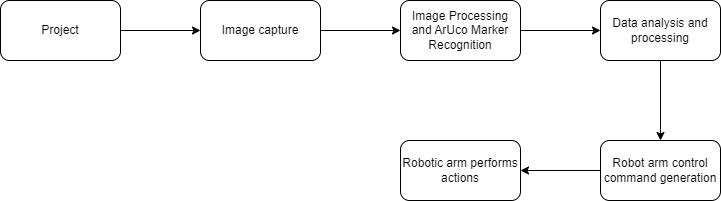

Project Components

The architecture of the entire system is mainly divided into the following parts:

1. Hardware Composition: The robotic arm, a USB camera, and supporting devices.

2. Software and Control System: OpenCV for ArUco marker recognition, the control algorithms, and the motion control interface of the robotic arm.

3. Data Workflow: Involves capturing images, image processing, data analysis and transformation, and execution by the robotic arm.

1. Image Capture

Using OpenCV to capture images.

    import cv2

    # Initialize the camera; 0 is the index of the default camera
    cap = cv2.VideoCapture(0)

    # Read one image frame
    ret, frame = cap.read()

    # Show the image frame
    cv2.imshow('video', frame)

    def capture_video():
        cap = cv2.VideoCapture(0)
        if not cap.isOpened():
            print("Can't open camera")
            return
        try:
            while True:
                ret, frame = cap.read()
                if not ret:
                    print("Can't read a frame from the camera")
                    break
                cv2.imshow('Video Capture', frame)
                # Press 'q' to quit
                if cv2.waitKey(1) & 0xFF == ord('q'):
                    break
        finally:
            # Always release the camera and close the preview window
            cap.release()
            cv2.destroyAllWindows()

2. Image Processing and ArUco Marker Recognition

Processing images captured by the camera and recognizing ArUco marker codes.

    from typing import Any, Tuple

    import cv2
    import numpy as np
    from numpy.typing import NDArray

    # The two functions below are methods of the ArucoDetector class.

    # Detect ArUco markers
    def detect_marker_corners(self, frame: np.ndarray) -> Tuple[NDArray, NDArray, NDArray]:
        # Convert the frame to grayscale before detection
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        corners: Any
        ids: Any
        rejectedImgPoints: Any
        corners, ids, rejectedImgPoints = self.detector.detectMarkers(gray)
        return corners, ids, rejectedImgPoints

    # Outline the detected ArUco markers and draw coordinate axes on each one
    def draw_marker(self, frame: np.ndarray, corners, tvecs, rvecs, ids) -> None:
        cv2.aruco.drawDetectedMarkers(frame, corners, ids, borderColor=(0, 200, 200))
        for tvec, rvec in zip(tvecs, rvecs):
            # Axis length 30 (same unit as the marker size), line thickness 2
            cv2.drawFrameAxes(frame, self.mtx, self.dist, rvec, tvec, 30, 2)

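    while True:
        ret, frame = cap.read()
        if not ret:
            break
        corners, ids, rejectedImgPoints = aruco_detector.detect_marker_corners(frame)
        if ids is not None:
            # Assumed step: the original snippet does not show where rvecs and tvecs
            # come from; here they are taken from estimatePoseSingleMarkers (section 3)
            rvecs, tvecs = aruco_detector.estimatePoseSingleMarkers(corners)
            aruco_detector.draw_marker(frame, corners, tvecs, rvecs, ids)
            ArucoDetector.draw_position_info(frame, corners, tvecs)
        cv2.imshow('Video Capture', frame)
        # Press 'q' to quit
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

3. Data Parsing and Processing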

Since the case study involves pose tracking, it is necessary to detect the pose of the ArUco marker, annotating each rotation vector (rvec) and translation vector (tvec). These vectors describe the marker's three-dimensional position and orientation relative to the camera.
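To make the rvec and tvec description above concrete, the sketch below converts a rotation vector into a rotation matrix with Rodrigues' formula, reads Euler angles from it, and computes the camera-to-marker distance from a translation vector. It is pure Python and not part of the original script. The ZYX (yaw, pitch, roll) convention and the sample numbers are assumptions, and arrays returned by OpenCV would first need flattening to three values (for example with `rvec.flatten()`).

```python
import math
from typing import List, Sequence, Tuple


def rodrigues(rvec: Sequence[float]) -> List[List[float]]:
    """Convert an axis-angle rotation vector to a 3x3 rotation matrix."""
    theta = math.sqrt(sum(v * v for v in rvec))
    if theta < 1e-12:
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = (v / theta for v in rvec)
    c, s = math.cos(theta), math.sin(theta)
    v = 1.0 - c
    return [[c + kx * kx * v, kx * ky * v - kz * s, kx * kz * v + ky * s],
            [ky * kx * v + kz * s, c + ky * ky * v, ky * kz * v - kx * s],
            [kz * kx * v - ky * s, kz * ky * v + kx * s, c + kz * kz * v]]


def euler_zyx_deg(r: List[List[float]]) -> Tuple[float, float, float]:
    """Return (roll, pitch, yaw) in degrees, assuming R = Rz(yaw) Ry(pitch) Rx(roll)."""
    roll = math.atan2(r[2][1], r[2][2])
    pitch = math.atan2(-r[2][0], math.hypot(r[0][0], r[1][0]))
    yaw = math.atan2(r[1][0], r[0][0])
    return math.degrees(roll), math.degrees(pitch), math.degrees(yaw)


def distance(tvec: Sequence[float]) -> float:
    """Straight-line distance from the camera to the marker origin."""
    return math.sqrt(sum(v * v for v in tvec))


# Hypothetical sample: marker rotated 90 degrees about the camera Z axis.
sample_rvec = [0.0, 0.0, math.pi / 2]
sample_tvec = [0.0, 30.0, 40.0]  # same unit as the marker size

print(euler_zyx_deg(rodrigues(sample_rvec)))
print(distance(sample_tvec))
```

Euler angles have several conventions, so the one expected by the arm's coordinate commands should be checked before feeding these values to it.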

    def estimatePoseSingleMarkers(self, corners):
        """
        Estimate the rvec and tvec of each marker detected by:
            corners, ids, rejectedImgPoints = detector.detectMarkers(image)

        corners - array of detected corners for each detected marker in the image

        Uses these attributes of the detector object:
        marker_size - side length of the markers
        mtx - camera matrix
        dist - camera distortion coefficients

        RETURN arrays of rvecs and tvecs, one entry per successfully solved marker
        """
        # Marker corners in the marker's own frame, centered on the marker
        marker_points = np.array([[-self.marker_size / 2, self.marker_size / 2, 0],
                                  [self.marker_size / 2, self.marker_size / 2, 0],
                                  [self.marker_size / 2, -self.marker_size / 2, 0],
                                  [-self.marker_size / 2, -self.marker_size / 2, 0]], dtype=np.float32)
        rvecs = []
        tvecs = []
        for corner in corners:
            corner: np.ndarray
            retval, rvec, tvec = cv2.solvePnP(marker_points, corner, self.mtx, self.dist,
                                              flags=cv2.SOLVEPNP_IPPE_SQUARE)
            if retval:
                rvecs.append(rvec)
                tvecs.append(tvec)
        rvecs = np.array(rvecs)
        tvecs = np.array(tvecs)
        return rvecs, tvecs

Given the large volume of data captured, filters are used to smooth the pose estimates and improve detection accuracy. The filters employed are a median filter, an average filter, and a second-order filter.

- Second-Order Filter: Used for precise control of signal frequency components, such as in signal processing and control systems, to reduce oscillations and improve stability, especially in pose estimation and precise motion control.

    import numpy as np

    def median_filter(pos, window, filter_len):
        if not np.any(window):
            # If the window is still empty (all zeros), fill it with pos
            window[:] = pos
        # Shift the stored samples toward the front, dropping the oldest one
        for i in range(filter_len - 1):
            window[i] = window[i + 1]
        # Store the newest sample in the last slot
        window[filter_len - 1] = pos
        # Return the median of the window
        return np.median(window)

    def Average_filter(pos, window, filter_len):
        if not np.any(window):
            # If the window is still empty (all zeros), fill it with pos
            window[:] = pos
        # Shift the stored samples toward the front, dropping the oldest one
        for i in range(filter_len - 1):
            window[i] = window[i + 1]
        # Store the newest sample in the last slot
        window[filter_len - 1] = pos
        # Return the mean of the window
        return np.mean(window)
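The difference between the median and average filters is easiest to see with a single outlier in the window. The sketch below uses only the standard library. The `SlidingWindow` class and the readings are hypothetical and are not part of the original script.

```python
from collections import deque
from statistics import mean, median


class SlidingWindow:
    """Fixed-size window that keeps only the most recent samples."""

    def __init__(self, size: int) -> None:
        self.samples = deque(maxlen=size)

    def push(self, value: float) -> None:
        # Appending to a full deque drops the oldest sample automatically
        self.samples.append(value)

    def median(self) -> float:
        return median(self.samples)

    def mean(self) -> float:
        return mean(self.samples)


# Made-up distance readings in mm; 250.0 stands in for a single bad detection.
readings = [100.0, 101.0, 99.0, 250.0, 100.0]

window = SlidingWindow(size=5)
for reading in readings:
    window.push(reading)

median_value = window.median()  # 100.0: the outlier is ignored
mean_value = window.mean()      # 130.0: the outlier pulls the average up
print(median_value, mean_value)
```

A median filter suits occasional spikes such as a misdetected corner, while an average filter is better at smoothing small random jitter.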

    import numpy as np

    # Filter state: the two previous inputs and outputs (assumed to start as zeros,
    # which is what the emptiness checks below test for)
    prev1 = np.zeros(3)
    prev2 = np.zeros(3)
    prev_out1 = np.zeros(3)
    prev_out2 = np.zeros(3)

    def twoorder_filter_single_input(sample):
        global prev1, prev2, prev_out1, prev_out2
        # The first two calls pass the sample through while the history fills up
        if np.array_equal(prev1, np.zeros(3)):
            output, prev1, prev_out1 = sample, sample, sample
            return output
        if np.array_equal(prev2, np.zeros(3)):
            prev2, prev_out2 = prev1, prev_out1
            output, prev1, prev_out1 = sample, sample, sample
            return output
        fc = 20   # Hz, cutoff frequency (designed filter frequency)
        fs = 100  # Hz, sampling frequency
        Ksi = 10  # damping ratio
        temp1 = (2 * np.pi * fc) ** 2
        temp2 = (2 * fs) ** 2
        temp3 = 8 * np.pi * fs * Ksi * fc
        temp4 = temp2 + temp3 + temp1
        K1 = temp1 / temp4
        K2 = 2 * K1
        K3 = K1
        K4 = 2 * (temp1 - temp2) / temp4
        K5 = (temp1 + temp2 - temp3) / temp4
        # y[n] = K1*x[n] + K2*x[n-1] + K3*x[n-2] - K4*y[n-1] - K5*y[n-2]
        # (K4 weights the most recent output, K5 the one before it)
        output = K1 * sample + K2 * prev1 + K3 * prev2 - K4 * prev_out1 - K5 * prev_out2
        # Update the filter state
        prev2, prev1, prev_out2, prev_out1 = prev1, sample, prev_out1, output
        return output

The system extracts the pose of the robotic arm or camera from the detected ArUco markers, filters the extracted angle data, and finally obtains the target's coordinates.
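For reference, the coefficients `K1` to `K5` in the second-order filter above match what a bilinear (Tustin) transform of a standard second-order low-pass filter produces. This is inferred from the `temp1` to `temp4` expressions and is not stated in the original script. The symbols $a$, $b$, $c$, $d$ are shorthand introduced here for `temp1`, `temp2`, `temp3`, `temp4`, and $\xi$ stands for `Ksi`.

```latex
\begin{aligned}
H(s) &= \frac{\omega_c^2}{s^2 + 2\xi\omega_c s + \omega_c^2},
  \qquad \omega_c = 2\pi f_c,
  \qquad s \to 2 f_s\,\frac{1 - z^{-1}}{1 + z^{-1}} \\
a &= \omega_c^2, \quad b = (2 f_s)^2, \quad
  c = 4\xi\omega_c f_s = 8\pi f_s \xi f_c, \quad d = a + b + c \\
H(z) &= \frac{a\,(1 + 2z^{-1} + z^{-2})}{d + 2(a - b)\,z^{-1} + (a + b - c)\,z^{-2}} \\
y[n] &= K_1 x[n] + K_2 x[n-1] + K_3 x[n-2] - K_4 y[n-1] - K_5 y[n-2] \\
K_1 &= K_3 = \frac{a}{d}, \quad K_2 = \frac{2a}{d}, \quad
  K_4 = \frac{2(a - b)}{d}, \quad K_5 = \frac{a + b - c}{d}
\end{aligned}
```

In this form $K_4$ multiplies the most recent output $y[n-1]$ and $K_5$ the one before it. Because $K_1 + K_2 + K_3 = 1 + K_4 + K_5 = 4a/d$, the filter has unit gain for a constant input, so a stationary marker is reported at its true position once the filter settles.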

4. Generation of Robotic Arm Control Commands

For controlling the motion of the robotic arm, it's necessary to set its mode of operation.

    import time

    # arm is the MyArm instance created in the next snippet

    # Set the end coordinate system (1 = tool)
    arm.set_end_type(1)
    time.sleep(0.03)

    # Set the tool coordinate system
    arm.set_tool_reference([-50, 0, 20, 0, 0, 0])
    time.sleep(0.03)

    # Set the command refresh mode
    arm.set_fresh_mode(0)
    time.sleep(0.03)

Once the target coordinates are obtained, they must be sent to the robotic arm so that it executes the corresponding motion. The control commands are generated from the processed data and guide the arm's movements in real time.

    from pymycobot import MyArm

    # "COM11" is a Windows-style port name; use the serial port the arm is connected to
    arm = MyArm("COM11", debug=False)

    # Send the target coordinates to move the robotic arm
    arm.send_coords(target_coords, 10, 2)

The key technical aspects are primarily in the following areas:

- ArUco Detection:

The detection of ArUco markers is fundamental to the operation of the entire system. By recognizing these markers through a camera, the system can obtain critical information about the marker's position and orientation. This information is crucial for the precise control and operation of the robotic arm, especially in applications requiring accurate positional adjustments, such as in automation, robot programming, and augmented reality. Using image processing techniques, the OpenCV library identifies markers from images captured by the camera and extracts their position and pose information.

- Filtering Technology:

Filtering techniques are key to ensuring data quality and system stability when processing image data or data from robotic arm sensors. They help remove noise and errors from the data, thereby enhancing the system's accuracy and reliability.

- Robotic Arm Control:

Before implementing pose tracking, the robotic arm's mode of operation must be set so that its movement matches the intended task and its operation stays precise and reliable. Adjusting the coordinate system, the tool reference point, and the way commands are executed makes the arm better suited to a specific environment and task.
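As one illustration of the control point above, filtered targets can still jitter slightly from frame to frame, so it can help to skip commands that barely differ from the last one sent and to keep targets inside a known workspace. The sketch below is not part of the original script. The function names, the deadband value, and the workspace limits are all hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple

# Hypothetical workspace limits in mm for x, y, z; real limits depend on the arm.
WORKSPACE: Tuple[Tuple[float, float], ...] = (
    (-250.0, 250.0),
    (-250.0, 250.0),
    (50.0, 400.0),
)


def clamp_position(coords: Sequence[float]) -> List[float]:
    """Clamp x, y, z to WORKSPACE and leave rx, ry, rz unchanged."""
    clamped = [max(lo, min(hi, v)) for v, (lo, hi) in zip(coords[:3], WORKSPACE)]
    return clamped + list(coords[3:])


def should_send(target: Sequence[float],
                last_sent: Optional[Sequence[float]],
                deadband_mm: float = 2.0) -> bool:
    """Send only if the position moved more than deadband_mm since the last command."""
    if last_sent is None:
        return True
    return math.dist(target[:3], last_sent[:3]) > deadband_mm


# Example with made-up coordinates in the form [x, y, z, rx, ry, rz].
last_sent = [100.0, 0.0, 200.0, 0.0, 0.0, 0.0]
target = clamp_position([105.0, 0.0, 200.0, 0.0, 0.0, 0.0])
if should_send(target, last_sent):
    # With a real arm this is where arm.send_coords(target, 10, 2) would be called
    last_sent = target
print(last_sent)
```

The deadband trades a little responsiveness for fewer redundant commands, so its value would need tuning against the noise that remains after filtering.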

This project explores the principles of image processing and machine vision in depth, particularly ArUco marker detection and pose estimation. It also applies several filtering techniques and shows why they matter for data quality and system performance. Overall, it offers comprehensive practice in combining these techniques in a real-world application.

Credits

Elephant Robotics

Elephant Robotics is a technology firm specializing in the design and production of robots, the development and application of operating systems, and intelligent manufacturing services for industry, commerce, education, scientific research, and the home.
